In today’s data-driven economy, businesses thrive on accurate, location-based insights that help them expand strategically, analyze competitors, and improve customer engagement. One of the most powerful platforms offering such opportunities is Google Maps, a hub of geospatial and business information. Organizations, researchers, and analysts increasingly rely on Google Maps data scraping to gather structured information from this platform.

While Google provides some degree of access through official APIs, businesses often need a more tailored approach. This is where Google Maps API scraping becomes essential, especially for companies seeking enriched and customized data outputs that align with specific business use cases.
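As a point of reference for the official route, here is a minimal sketch that builds, but does not send, a Text Search request for Google's Places API (New). It assumes the `places:searchText` endpoint and the `X-Goog-Api-Key` and `X-Goog-FieldMask` headers as described in Google's documentation at the time of writing. The helper name `build_text_search_request`, the query, and the key placeholder are illustrative only. Check the current Places API documentation, quotas, and pricing before relying on it.

```python
import json
import urllib.request

# Assumed endpoint for Places API (New) Text Search; confirm against Google's current docs.
SEARCH_TEXT_URL = "https://places.googleapis.com/v1/places:searchText"

def build_text_search_request(query, api_key, fields):
    """Build (without sending) a Text Search request that asks only for `fields`."""
    body = json.dumps({"textQuery": query}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "X-Goog-Api-Key": api_key,
        # The field mask restricts the response to the attributes you actually need.
        "X-Goog-FieldMask": ",".join(fields),
    }
    return urllib.request.Request(SEARCH_TEXT_URL, data=body, headers=headers, method="POST")

req = build_text_search_request(
    "coffee shops in Austin, TX",
    api_key="YOUR_API_KEY",  # placeholder, not a real key
    fields=["places.displayName", "places.formattedAddress", "places.rating"],
)
# Sending it would be: urllib.request.urlopen(req), which returns the JSON response.
```

The field mask is the official API's own way of requesting only the attributes a use case needs, which keeps responses small.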

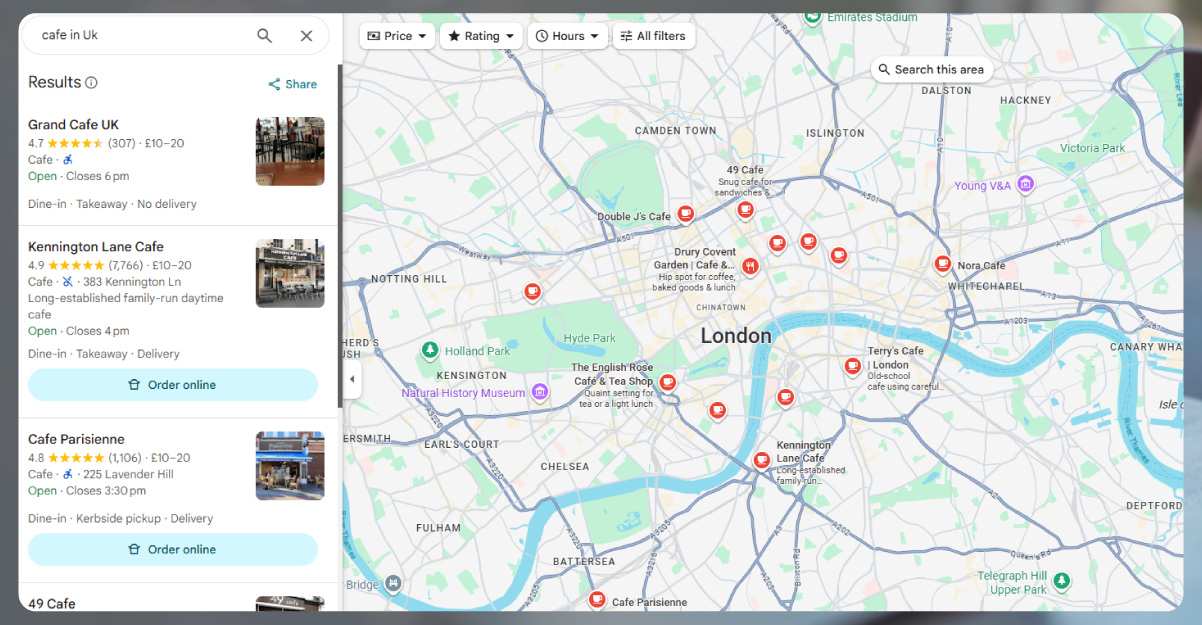

For example, marketers, franchise owners, and real estate consultants often need to scrape Google Maps location data to discover potential store sites, analyze consumer density, or monitor nearby competitors. By doing so, they unlock valuable datasets that go beyond the standard information displayed on the map itself.


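Google Maps scraping typically involves several steps: defining which data attributes are needed, collecting the listings, cleaning the raw results, and storing them for analysis.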

Scraping platforms like Google Maps requires careful adherence to ethical and legal frameworks, including the platform's terms of service and applicable data-protection laws.

Many companies turn to Web Crawling Services to ensure they maintain compliance while extracting useful datasets.

Similarly, specialized Web Scraping Services help organizations obtain structured, reliable information without investing in complex in-house scraping infrastructure.

Retail and E-Commerce

Retailers depend on Google Maps data scraping to track competitor store locations, assess potential customer footfall, and plan expansion strategies. By analyzing store clusters and consumer accessibility, they can identify profitable areas for new outlets, optimize supply chains, and align product availability with demand patterns, ensuring greater competitiveness and improved customer satisfaction across markets.
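One simple way to spot store clusters is to bin competitor locations into a latitude/longitude grid and compare counts per cell. The sketch below works under stated assumptions: coordinates are plain `(lat, lng)` tuples, the helper names are hypothetical, the coordinates are placeholders rather than real stores, and the 0.01° cell size (roughly 1.1 km north to south, narrower east to west away from the equator) is an arbitrary choice.

```python
import math
from collections import Counter

def grid_cell(lat, lng, cell_deg=0.01):
    """Map a coordinate to the integer index of the lat/lng grid cell containing it."""
    return (math.floor(lat / cell_deg), math.floor(lng / cell_deg))

def competitor_density(stores, cell_deg=0.01):
    """Count competitor stores per grid cell; `stores` is a list of (lat, lng) tuples."""
    return Counter(grid_cell(lat, lng, cell_deg) for lat, lng in stores)

def least_saturated(candidates, density, cell_deg=0.01):
    """Return the candidate site whose cell has the fewest competitors (unseen cells count as 0)."""
    return min(candidates, key=lambda site: density[grid_cell(*site, cell_deg)])

# Placeholder coordinates, not real store data.
competitors = [(40.7128, -74.0060), (40.7130, -74.0055), (40.7300, -73.9900)]
density = competitor_density(competitors)
best_site = least_saturated([(40.7129, -74.0058), (40.7500, -73.9800)], density)
```

Raw store counts are only a first pass; a fuller analysis would weigh each cell by demand signals such as population or footfall.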

Hospitality and Tourism

Hotels, restaurants, and travel operators use scraped datasets from Google Maps to monitor competitor pricing, service offerings, and customer reviews. This information helps them benchmark performance, refine marketing strategies, and enhance guest experiences. By analyzing ratings and location details, they gain insights into consumer preferences, enabling better decision-making for pricing, promotions, and destination positioning.

Real Estate

Real estate agencies rely on Google Maps data scraping to evaluate neighborhood amenities, nearby schools, healthcare facilities, and transport links. Such insights help agents showcase property value to buyers and investors. With access to detailed location datasets, agencies can identify emerging hotspots, assess livability scores, and align property recommendations with client needs, increasing closing rates.
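To evaluate nearby amenities, a common starting point is the great-circle (haversine) distance between a property and each scraped place. The sketch below assumes each place record has hypothetical `name`, `category`, `lat`, and `lng` keys and uses toy coordinates near 0°, 0°. Keep in mind that straight-line distance is only a proxy for real walking or driving time.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

def haversine_km(lat1, lng1, lat2, lng2):
    """Great-circle distance in kilometres between two lat/lng points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lng2 - lng1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def amenities_near(property_loc, places, radius_km=1.0):
    """Group places within `radius_km` of a property by category, nearest first."""
    lat, lng = property_loc
    nearby = {}
    for place in places:
        dist = haversine_km(lat, lng, place["lat"], place["lng"])
        if dist <= radius_km:
            nearby.setdefault(place["category"], []).append((place["name"], round(dist, 2)))
    for items in nearby.values():
        items.sort(key=lambda item: item[1])
    return nearby

# Placeholder records in a hypothetical scraped schema.
places = [
    {"name": "Elm School", "category": "school", "lat": 0.0, "lng": 0.005},
    {"name": "City Hospital", "category": "healthcare", "lat": 0.02, "lng": 0.0},
]
summary = amenities_near((0.0, 0.0), places, radius_km=1.0)
```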

Logistics and Supply Chain

Logistics companies use map data scraping to improve route planning and reduce delivery delays. By analyzing store locations, customer addresses, and traffic trends, they can optimize delivery paths for efficiency and cost savings. This data-driven approach helps businesses cut fuel costs, reduce transit time, and streamline supply chains for better service reliability and performance.
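As a simplified illustration of route ordering, the sketch below applies the greedy nearest-neighbor heuristic, which always drives from the current point to the closest unvisited stop. It assumes stops are already projected to planar coordinates in kilometres and ignores roads and traffic. The result is usually reasonable but not guaranteed to be the shortest route; production systems typically rely on dedicated vehicle-routing solvers.

```python
import math

def nearest_neighbor_route(depot, stops):
    """Greedy visiting order: from the current point, always go to the closest unvisited stop."""
    remaining = dict(stops)
    route, current = [], depot
    while remaining:
        name = min(remaining, key=lambda n: math.dist(current, remaining[n]))
        route.append(name)
        current = remaining.pop(name)
    return route

def route_length(depot, stops, order):
    """Total straight-line length from the depot through the stops in `order` (no return leg)."""
    points = [depot] + [stops[name] for name in order]
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))

# Hypothetical stops, already projected to planar (x, y) coordinates in km.
stops = {"A": (5.0, 0.0), "B": (1.0, 0.0), "C": (2.0, 0.0)}
order = nearest_neighbor_route((0.0, 0.0), stops)
```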

Healthcare

Hospitals and clinics leverage Google Maps scraping to assess service coverage, identify underserved regions, and plan branch expansions strategically. By analyzing location data on existing facilities and nearby amenities, healthcare providers can close accessibility gaps, optimize patient reach, and improve resource allocation. This leads to enhanced healthcare delivery and stronger community engagement in targeted regions.

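While the benefits are vast, scraping Google Maps also comes with challenges. These include IP blocks and CAPTCHAs, messy or duplicated raw data, listings that quickly go out of date, and the sheer volume of data to store and process.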

Overcoming these challenges requires advanced tools and APIs, combined with expertise in parsing geospatial data.

1. Proxies & Rotation

Scraping Google Maps from a single IP address can trigger detection, CAPTCHAs, or temporary blocks that disrupt workflows. Proxies mask the original IP, and rotating them makes requests appear to come from different sources, which reduces the risk of being flagged. Residential proxies are generally harder to detect, while datacenter proxies are usually faster and cheaper, so large-scale projects often balance the two. Proxy rotation not only protects against bans but also keeps collection running, letting scrapers gather large volumes of location-based data without interruptions.
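A minimal sketch of round-robin rotation follows. `ProxyRotator` is a hypothetical helper, the proxy URLs are placeholders, and the returned dict follows the `{"http": ..., "https": ...}` shape that the `requests` library accepts for its `proxies` argument. It does not handle CAPTCHAs, rate limiting, or retries.

```python
from itertools import cycle

class ProxyRotator:
    """Hypothetical round-robin proxy picker that skips proxies marked as blocked."""

    def __init__(self, proxy_urls):
        if not proxy_urls:
            raise ValueError("at least one proxy URL is required")
        self._pool = list(proxy_urls)
        self._cycle = cycle(self._pool)
        self._blocked = set()

    def next_proxy(self):
        """Return the next usable proxy as a requests-style proxies dict."""
        for _ in range(len(self._pool)):  # at most one full pass over the pool
            url = next(self._cycle)
            if url not in self._blocked:
                return {"http": url, "https": url}
        raise RuntimeError("all proxies are marked as blocked")

    def mark_blocked(self, url):
        """Exclude a proxy, e.g. after it starts receiving CAPTCHAs or HTTP 429 responses."""
        self._blocked.add(url)

# Placeholder proxy endpoints.
rotator = ProxyRotator(["http://proxy1.example:8080", "http://proxy2.example:8080"])
# Usage with the requests library:
#   requests.get(url, proxies=rotator.next_proxy(), timeout=10)
```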

2. Clear Requirements

Scraping too much data without a plan wastes time, resources, and storage. Before starting, it is vital to outline the exact business goals and specify which data attributes are required. For example, marketers seeking leads may only need names, addresses, and contact details, while analysts focusing on customer experience might prioritize reviews and ratings. A clear requirement framework avoids unnecessary clutter, reduces processing loads, and speeds up data analysis, ensuring businesses extract meaningful insights directly aligned with strategic objectives.
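Writing requirements down as a per-use-case field list makes them enforceable in code. In this minimal sketch, the use-case names and field names are hypothetical and should be replaced with whatever schema your scraper actually produces. Fields missing from a record come back as `None`, so gaps stay visible instead of silently disappearing.

```python
# Hypothetical use cases and field names; adapt to your own schema.
REQUIRED_FIELDS = {
    "lead_generation": ("name", "address", "phone", "website"),
    "customer_experience": ("name", "rating", "review_count", "reviews"),
}

def select_fields(records, use_case):
    """Keep only the attributes required for `use_case`; missing ones become None."""
    try:
        fields = REQUIRED_FIELDS[use_case]
    except KeyError:
        raise ValueError(f"unknown use case: {use_case!r}") from None
    return [{field: record.get(field) for field in fields} for record in records]

raw = [{"name": "Cafe Uno", "address": "1 Main St", "phone": "555", "website": "https://x.example",
        "rating": 4.5, "hours": "9-5"}]
leads = select_fields(raw, "lead_generation")
```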

3. Automated Cleaning

Raw scraped data usually contains errors, inconsistencies, or duplicates that can reduce accuracy if left unchecked. Automating cleaning ensures data integrity while saving valuable time. Scripts can be designed to standardize formats, validate details like phone numbers or websites, and remove repeated entries. They can also detect incomplete or suspicious records for manual review. Automating this step enhances quality, creating clean, reliable datasets ready for analytics. This not only improves insights but also prevents costly errors in decision-making processes.
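The cleaning steps described above can be sketched as a single pass over the records. This is an illustration under simple assumptions, not a production validator. The field names and the `REQUIRED` tuple are hypothetical. Phone numbers are reduced to digits (which drops any leading "+") and accepted only at 7 to 15 digits, since E.164 numbers have at most 15. Websites must be absolute `http` or `https` URLs, and duplicates are detected with a case-insensitive name-plus-address key.

```python
import re
from urllib.parse import urlparse

REQUIRED = ("name", "address")  # hypothetical minimum for a usable record

def normalize_phone(raw):
    """Keep digits only; accept 7 to 15 digits, otherwise return None."""
    digits = re.sub(r"\D", "", raw or "")
    return digits if 7 <= len(digits) <= 15 else None

def is_valid_website(url):
    """Accept only absolute http(s) URLs with a host."""
    parsed = urlparse(url or "")
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)

def clean_records(records):
    """Return (clean, flagged): deduplicated valid records and incomplete ones for review."""
    clean, flagged, seen = [], [], set()
    for rec in records:
        # Collapse repeated whitespace and trim every string field.
        rec = {k: " ".join(v.split()) if isinstance(v, str) else v for k, v in rec.items()}
        rec["phone"] = normalize_phone(rec.get("phone"))
        if not is_valid_website(rec.get("website")):
            rec["website"] = None
        if any(not rec.get(field) for field in REQUIRED):
            flagged.append(rec)  # incomplete: route to manual review
            continue
        key = (rec["name"].lower(), rec["address"].lower())
        if key in seen:
            continue  # duplicate listing
        seen.add(key)
        clean.append(rec)
    return clean, flagged

records = [
    {"name": " Cafe  Uno ", "address": "1 Main St", "phone": "(555) 123-4567", "website": "https://cafeuno.example"},
    {"name": "cafe uno", "address": "1 MAIN ST", "phone": "555.123.4567", "website": "cafeuno.example"},
    {"name": "No Address", "address": "", "phone": "12"},
]
clean, flagged = clean_records(records)
```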

4. Regular Monitoring

Google Maps data is never static: new businesses open, old ones close, and reviews change daily. A one-time scrape quickly becomes outdated, which reduces its usefulness for strategic decisions. Regular monitoring keeps information current and accurate, and automated schedules, such as weekly or monthly updates, keep datasets fresh. This approach is particularly valuable in hospitality, retail, and real estate, where up-to-date information directly affects revenue. Continuous monitoring helps businesses stay informed, adapt quickly, and maintain a competitive advantage.
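At its core, monitoring means comparing each new snapshot with the previous one. This sketch assumes each snapshot is a dict keyed by a stable listing identifier (shown as hypothetical IDs like `p1`, with placeholder records). It reports listings that appeared, disappeared, or changed in a few tracked fields, and a scheduler such as cron could run it weekly or monthly. A removed listing may signal a closure or may simply have dropped out of the results, so it is worth confirming before acting on it.

```python
def diff_snapshots(old, new, tracked=("name", "address", "rating", "status")):
    """Compare two snapshots ({listing_id: record}) and report what changed."""
    added = sorted(new.keys() - old.keys())    # newly listed businesses
    removed = sorted(old.keys() - new.keys())  # listings no longer present
    changed = {}
    for listing_id in old.keys() & new.keys():
        before, after = old[listing_id], new[listing_id]
        diffs = {f: (before.get(f), after.get(f)) for f in tracked if before.get(f) != after.get(f)}
        if diffs:
            changed[listing_id] = diffs
    return {"added": added, "removed": removed, "changed": changed}

last_week = {"p1": {"name": "Cafe Uno", "rating": 4.5}, "p2": {"name": "Old Deli", "rating": 4.0}}
this_week = {"p1": {"name": "Cafe Uno", "rating": 4.3}, "p3": {"name": "New Bakery", "rating": 4.8}}
report = diff_snapshots(last_week, this_week)
```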

5. Cloud Infrastructure

Scraping Google Maps often generates massive datasets that require efficient storage and processing power. Cloud infrastructure offers a scalable and cost-effective solution for handling such volumes. Platforms like AWS, Azure, or Google Cloud provide secure storage, real-time data pipelines, and machine learning tools for advanced analysis. Teams benefit from easy collaboration, remote accessibility, and automatic scalability as needs grow. Using cloud solutions ensures that businesses can manage scraped data seamlessly, integrate it with analytics dashboards, and support future expansion effortlessly.
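A common pattern before loading data into cloud storage is to write each run as newline-delimited JSON under a date-partitioned folder. The sketch below uses a Hive-style `dt=YYYY-MM-DD` prefix, a layout that many cloud query engines can recognize as a partition. The helper `write_partition` is a hypothetical local sketch. Uploading the folder (for example with `aws s3 sync` or `gcloud storage rsync`) is left to your platform's own tooling.

```python
import json
from datetime import date
from pathlib import Path

def write_partition(records, root, run_date: date, part=0):
    """Write one scrape run as JSON Lines under root/dt=YYYY-MM-DD/part-NNNN.jsonl."""
    out_dir = Path(root) / f"dt={run_date.isoformat()}"
    out_dir.mkdir(parents=True, exist_ok=True)
    out_path = out_dir / f"part-{part:04d}.jsonl"
    with out_path.open("w", encoding="utf-8") as fh:
        for record in records:
            # One JSON object per line; ensure_ascii=False keeps non-Latin place names readable.
            fh.write(json.dumps(record, ensure_ascii=False) + "\n")
    return out_path
```

Date partitions let later jobs reprocess or query a single run without scanning the entire history.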

Google Maps scraping is an invaluable approach for businesses that thrive on geospatial insights, customer sentiment monitoring, and market intelligence. From competitor analysis to lead generation, the applications are diverse and impactful.

Outsourcing to Web Scraping API Services can help businesses obtain reliable, structured, and scalable data while staying within ethical and legal boundaries. Furthermore, the rise of machine learning makes it increasingly important to Extract AI Data for predictive modeling and advanced analytics.

Finally, as search engines and map platforms become more integrated, businesses must also consider the role of Search Engine Data Scraping Service for holistic digital intelligence strategies. By aligning these approaches, organizations not only enhance decision-making but also future-proof their data-driven strategies in an increasingly competitive landscape.

Experience top-notch web scraping services and mobile app scraping solutions with iWeb Data Scraping. Our skilled team excels at extracting a wide range of datasets, including retail store locations and beyond. Connect with us today to learn how our customized services can address your unique project needs with high efficiency and dependability.