In the fast-paced world of eCommerce, staying ahead of the competition requires monitoring and analyzing data from various sources. Web scraping is a valuable technique for extracting data from eCommerce websites, whether for competitive analysis, market research, pricing insights, lead generation, or data-driven decision-making.

However, scraping eCommerce websites can be challenging, especially when using local browsers. Common issues include IP blocking due to excessive requests, rate limiting, easy detection when no proxies are used, CAPTCHA challenges, and difficulty handling dynamically loaded website content.

An eCommerce data scraper can overcome these challenges, making web scraping smoother and more efficient. This specialized tool offers access to a vast pool of residential and mobile IPs, enabling IP rotation to reduce the risk of blocking. Additionally, it can distribute requests across multiple IPs to address rate limiting, and it automates proxy management for uninterrupted scraping. It also enhances privacy protection and mimics user behavior, making it harder for websites to detect and block scraping activities.

The first step in scraping an e-commerce website using Python is identifying the target website's URL. In this example, we'll demonstrate the web scraping process using the Puma e-commerce website. We will focus on scraping data related to the MANCHESTER CITY FC Jerseys currently available for sale.

You can access the specific URL here: https://in.puma.com/in/en/collections/collections-football/collections-football-manchester-city-fc.

Step 1: Installing Necessary Libraries

Ensure the required libraries are installed in your Python environment. These include libraries for handling HTTP requests, parsing HTML content (BeautifulSoup), and working with CSV files.

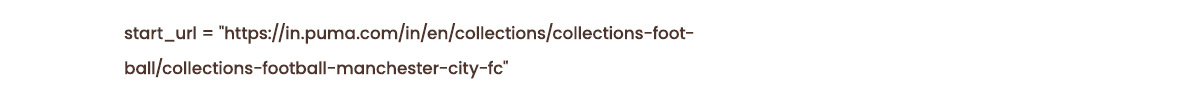
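As a minimal sketch of this setup, the snippet below assumes the `requests` package is used for HTTP (the article does not name the HTTP library, so this is an assumption), together with `beautifulsoup4` for parsing and Python's built-in `csv` module.

```python
# Install the third-party packages first (run in a shell, not in Python):
#   pip install requests beautifulsoup4
# The csv module ships with Python and needs no installation.

import csv                      # writing the scraped rows to a CSV file
import requests                 # assumed HTTP client for fetching pages
from bs4 import BeautifulSoup   # HTML parser

# Quick sanity check that BeautifulSoup parses a tiny HTML fragment.
check = BeautifulSoup("<p>ok</p>", "html.parser").p.get_text()
print(check)
```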

Step 2: Define the Starting URL

Specify the initial URL from which the web scraper will extract data. In our scenario, this starting URL corresponds to the page showcasing MANCHESTER CITY FC Jerseys currently on sale.
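A short sketch of this step might look like the following. The variable names `start_url` and `base_url` are illustrative; deriving the site root with `urlsplit` is one possible convenience for later steps, not something the original code is known to do.

```python
from urllib.parse import urlsplit

# Category page listing the jerseys (URL taken from the article).
start_url = (
    "https://in.puma.com/in/en/collections/collections-football/"
    "collections-football-manchester-city-fc"
)

# Derive the site root so relative product links can be completed later.
parts = urlsplit(start_url)
base_url = f"{parts.scheme}://{parts.netloc}/"
print(base_url)
```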

Step 3: Initiating the Scraping Process

Now, let's set things in motion. Our next objective is to access the designated start URL, retrieve its content, and locate the product links. The following two lines of code are employed to accomplish this.

A Response object is generated upon making the HTTP request, encapsulating various response details like content, encoding, and status. This information is stored within the web_page variable, allowing us to proceed with parsing using BeautifulSoup.

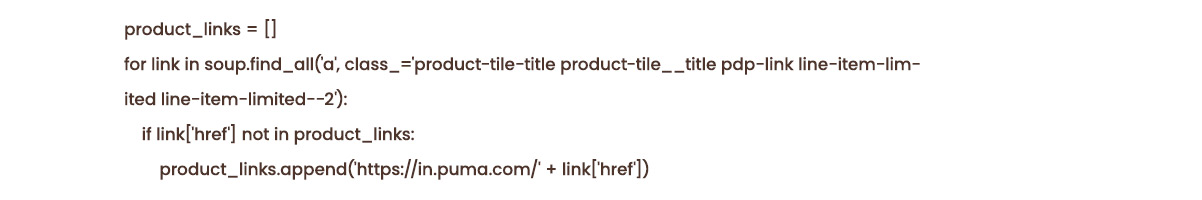
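The fetch-and-parse step could be sketched roughly as below, assuming `requests` as the HTTP client. The helper name `fetch_soup`, the `User-Agent` string, and the timeout value are illustrative choices rather than part of the original code.

```python
import requests
from bs4 import BeautifulSoup

def fetch_soup(url, timeout=10):
    """Fetch a page and return its parsed HTML as a BeautifulSoup object."""
    # Illustrative header; some sites reject requests without a User-Agent.
    headers = {"User-Agent": "Mozilla/5.0 (compatible; example-scraper)"}
    web_page = requests.get(url, headers=headers, timeout=timeout)
    web_page.raise_for_status()  # stop early on 4xx/5xx responses
    return BeautifulSoup(web_page.content, "html.parser")

# Usage (performs a real network request, so it is left commented out):
# soup = fetch_soup(start_url)
```

Wrapping the two lines in a function keeps the status check in one place, which is handy once the same logic is reused for every product page.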

Step 4: Extracting Product URLs

Next, the scraper traverses the HTML content, identifies the product URLs, and adds them to a list for further processing. CSS selectors play a pivotal role in this task, as they enable the selection of HTML elements based on criteria such as ID, class, type, and attributes.

Upon inspecting the page using Chrome Developer Tools, we observed a standard class shared among all product links.

We use the soup.find_all method to retrieve all the product links from the page based on the shared class. These links are then accumulated in the product_links list.

The URLs available on the page are incomplete, so it's essential to complete them. To create valid URLs, we prepend the first part, "https://in.puma.com/".

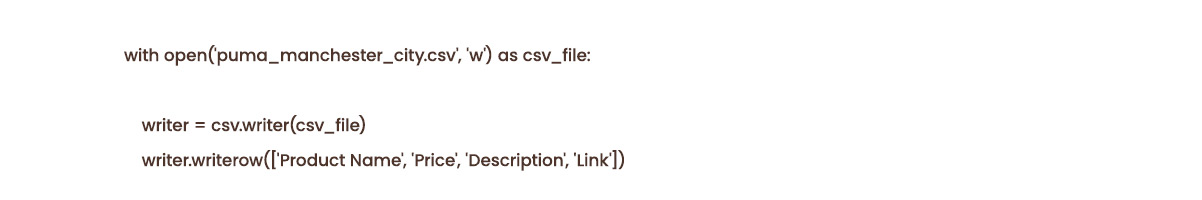
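The link extraction can be illustrated offline with a small sample of HTML. The class name `product-link` and the sample paths are hypothetical placeholders; the real class has to be read from the page with Chrome Developer Tools. `urljoin` is used here as one way to complete the relative URLs.

```python
from urllib.parse import urljoin
from bs4 import BeautifulSoup

BASE_URL = "https://in.puma.com/"
LINK_CLASS = "product-link"  # hypothetical; inspect the real page for the actual class

# Made-up markup standing in for the category page.
SAMPLE_HTML = """
<ul>
  <li><a class="product-link" href="/in/en/pd/example-home-jersey/000001">Home</a></li>
  <li><a class="product-link" href="/in/en/pd/example-away-jersey/000002">Away</a></li>
  <li><a class="nav-link" href="/in/en/help">Help</a></li>
</ul>
"""

def extract_product_links(soup, link_class=LINK_CLASS, base_url=BASE_URL):
    """Collect absolute URLs for every anchor carrying the shared class."""
    product_links = []
    for anchor in soup.find_all("a", class_=link_class):
        href = anchor.get("href")
        if href:
            # Complete the relative path with the site root.
            product_links.append(urljoin(base_url, href))
    return product_links

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
product_links = extract_product_links(soup)
print(product_links)
```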

Before we commence parsing the URLs extracted in the previous step, preparing the data for storage in a CSV file is crucial. Use the following lines of code for this data preparation process.

The data is written to a file named "puma_manchester_city.csv" using a csv writer object and its .writerow() method. This step ensures the extracted data is systematically organized and saved for further analysis.

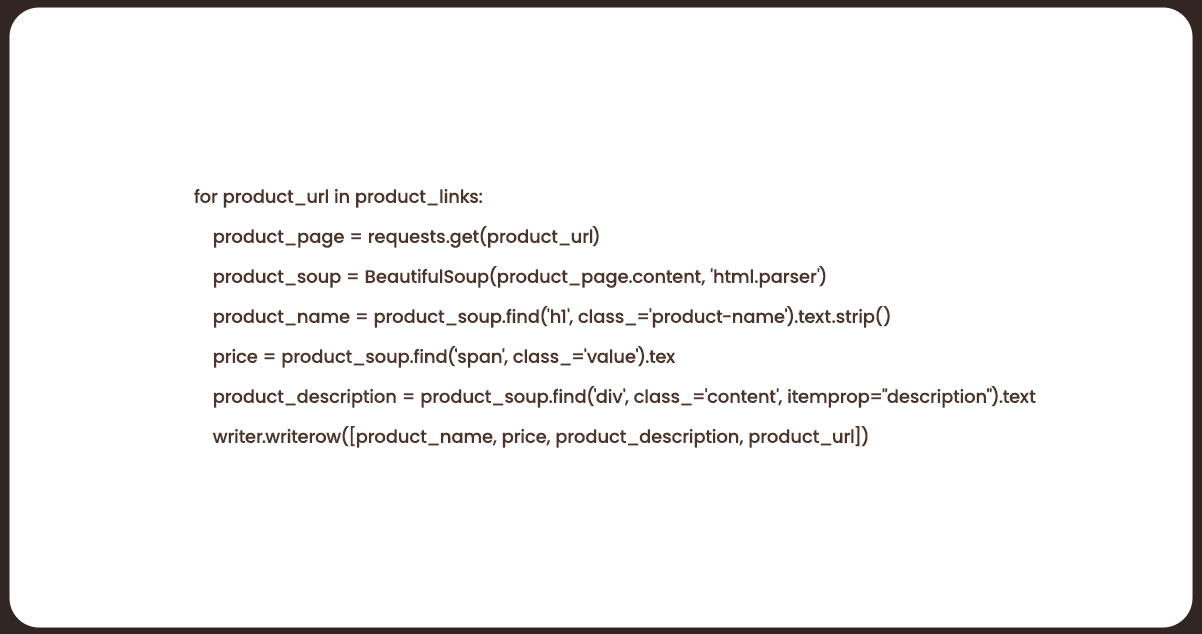
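A minimal sketch of the CSV preparation follows. The column names in `HEADER` and the helper `write_products` are assumptions, since the article does not list the fields it stores. The demo writes to an in-memory buffer so it can run without touching the file system.

```python
import csv
import io

HEADER = ["name", "price", "url"]  # hypothetical columns

def write_products(file_obj, rows):
    """Write a header row followed by one row per product."""
    writer = csv.writer(file_obj)
    writer.writerow(HEADER)
    for row in rows:
        writer.writerow(row)

# Demo with made-up data and an in-memory file.
buffer = io.StringIO()
write_products(
    buffer,
    [["Example Jersey", "3999", "https://in.puma.com/in/en/pd/example/000001"]],
)
print(buffer.getvalue())

# To write the real file instead:
# with open("puma_manchester_city.csv", "w", newline="", encoding="utf-8") as f:
#     write_products(f, rows)
```

Opening the file with `newline=""` is what the `csv` module documentation recommends, as it avoids blank lines between rows on Windows.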

In the subsequent step, we iterate through each product URL within the product_links list, parsing them to extract valuable information. This parsing process is essential for collecting data from each product page.
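The loop over product pages might be sketched as follows. The CSS selectors (`h1.product-name`, `span.product-price`), the sample HTML, and the `fetch` callable are all hypothetical. Passing `fetch` in as a parameter lets the example run without network access.

```python
from bs4 import BeautifulSoup

# Made-up product page used in place of a real HTTP response.
SAMPLE_PRODUCT_HTML = """
<html><body>
  <h1 class="product-name">Example Home Jersey</h1>
  <span class="product-price">Rs. 3,999</span>
</body></html>
"""

def text_or_empty(soup, selector):
    """Return the stripped text of the first match, or '' if nothing matches."""
    element = soup.select_one(selector)
    return element.get_text(strip=True) if element else ""

def parse_product(html, url):
    """Extract the fields of interest from one product page."""
    soup = BeautifulSoup(html, "html.parser")
    return {
        "name": text_or_empty(soup, "h1.product-name"),      # hypothetical selector
        "price": text_or_empty(soup, "span.product-price"),  # hypothetical selector
        "url": url,
    }

def scrape_products(product_links, fetch):
    """Fetch and parse every product URL; `fetch` maps a URL to its HTML."""
    return [parse_product(fetch(url), url) for url in product_links]

links = ["https://in.puma.com/in/en/pd/example-home-jersey/000001"]
rows = scrape_products(links, fetch=lambda url: SAMPLE_PRODUCT_HTML)
print(rows)
```

In a real run, `fetch` could be something like `lambda url: requests.get(url, timeout=10).text`, ideally with a short pause between requests to stay within the site's rate limits.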

Upon completing these steps and executing the code, we generate a CSV file containing data from the category ‘MANCHESTER CITY FC Jerseys’. However, the data obtained may not be fully clean. It may require additional cleaning operations, either post-scraping or as part of the scraping process, to achieve a more refined dataset.
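As an illustration of such cleaning, the sketch below normalizes whitespace and converts a price string into a number. The helper names and the sample price formats are assumptions, since the actual raw values depend on the page markup.

```python
import re

def clean_text(raw):
    """Collapse runs of whitespace (including newlines) into single spaces."""
    return " ".join(raw.split())

def clean_price(raw):
    """Pull the first number out of a price string, or None if there is none."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    if not match:
        return None
    # Drop thousands separators before converting.
    return float(match.group().replace(",", ""))

print(clean_text("  Example   Home\n Jersey "))
print(clean_price("Rs. 3,999"))
```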

E-commerce scraping is a valuable tool for brands worldwide, facilitating data acquisition from e-commerce websites. This data can be used for various purposes, including competitor analysis, price monitoring across multiple Amazon sellers, and identifying new products relevant to customers. Web scraping empowers businesses with valuable insights for informed decision-making and strategic growth.

For further details, contact iWeb Data Scraping now! You can also reach us for all your web scraping service and mobile app data scraping needs.