Real estate data scraping gives individuals, investors, and industry professionals access to valuable property information. Scraping property data from listings, market websites, and databases supports comprehensive market analysis, property valuation, and trend monitoring. Real estate agents can use scraped data to provide clients with up-to-date listings and market insights, while property investors can identify potential opportunities and assess market dynamics. Researchers benefit from a large pool of data for in-depth studies. However, it is crucial to scrape real estate websites and databases ethically and legally, respecting their terms of service and users' privacy.

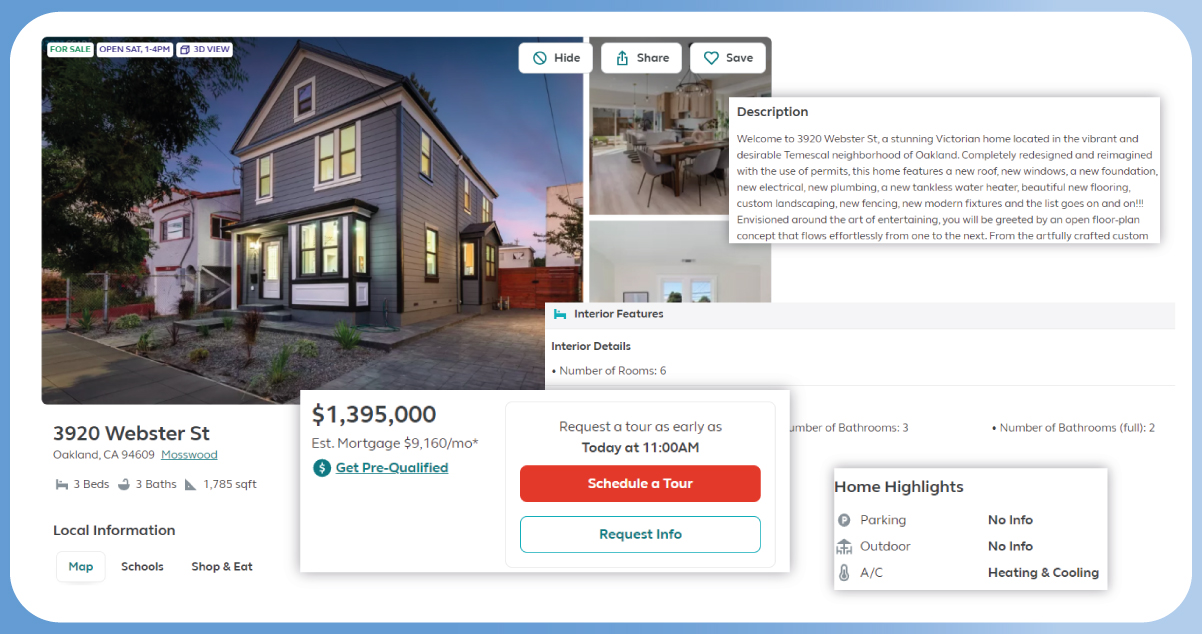

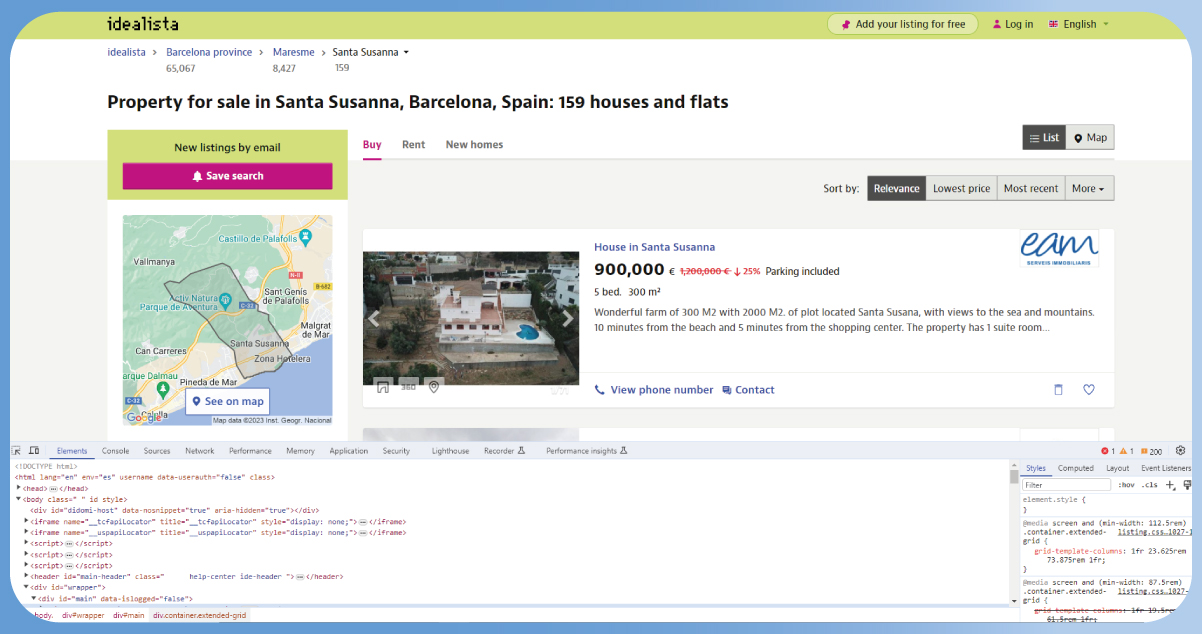

Idealista is a prominent online real estate platform that offers a comprehensive range of services for property buyers, sellers, renters, and investors. Founded in Spain, Idealista has grown to become one of the largest real estate websites in Europe, with a presence in multiple countries. It provides a user-friendly interface for listing and searching for residential and commercial properties. Users can access detailed property information, high-quality images, and neighborhood insights. Idealista also offers market trends and valuable data, making it a vital resource for real estate professionals, individuals seeking properties, and investors looking for opportunities in the European real estate market. Scrape Idealista data to gain access to a wealth of property listings, market trends, and neighborhood insights, empowering you to make informed real estate decisions, whether you're a homebuyer, seller, or investor.

Scraping the Idealista website offers numerous advantages for individuals and businesses in the real estate industry. Idealista is a popular online platform for property listings and market insights, making it a valuable data source for real estate professionals, investors, and those seeking homes or commercial spaces. Here are seven reasons to scrape real estate data:

To scrape Idealista data with Scrapy, a Python web scraping framework, follow these steps:

Ensure you have Python installed on your computer. If not, download and install it from the official Python website.

Install Scrapy with pip: pip install scrapy

Open your command prompt or terminal and navigate to the directory where you wish to generate your project.

Create a new project using the following command:

scrapy startproject idealista_barcelona

It will create a directory structure for your project.

Navigate to your project directory using cd idealista_barcelona.

Create a new Spider using the following command (replace my_spider with a relevant name):

scrapy genspider my_spider idealista.es

It will create a Python file for your spider in the spiders directory.

Open the spider file created in the spiders directory. You can use a code editor or IDE of your choice.

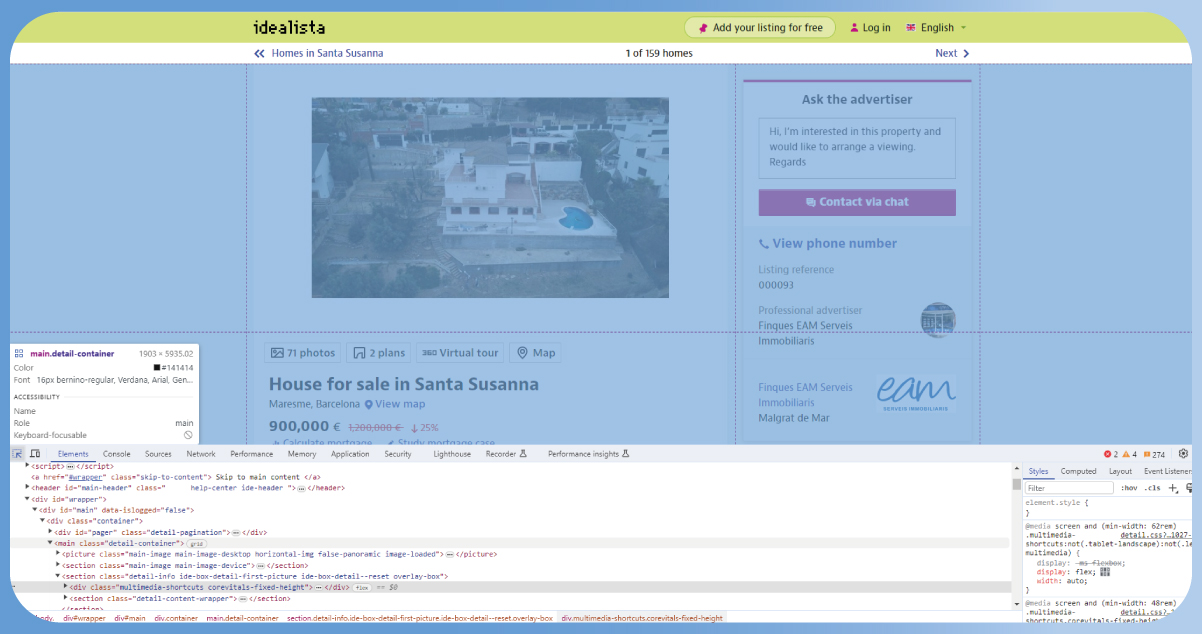

Define the spider's name, start URLs (URLs to scrape), and any other settings you need for your scraping task.

In your spider file, you will define how to navigate the website, extract data, and follow links.

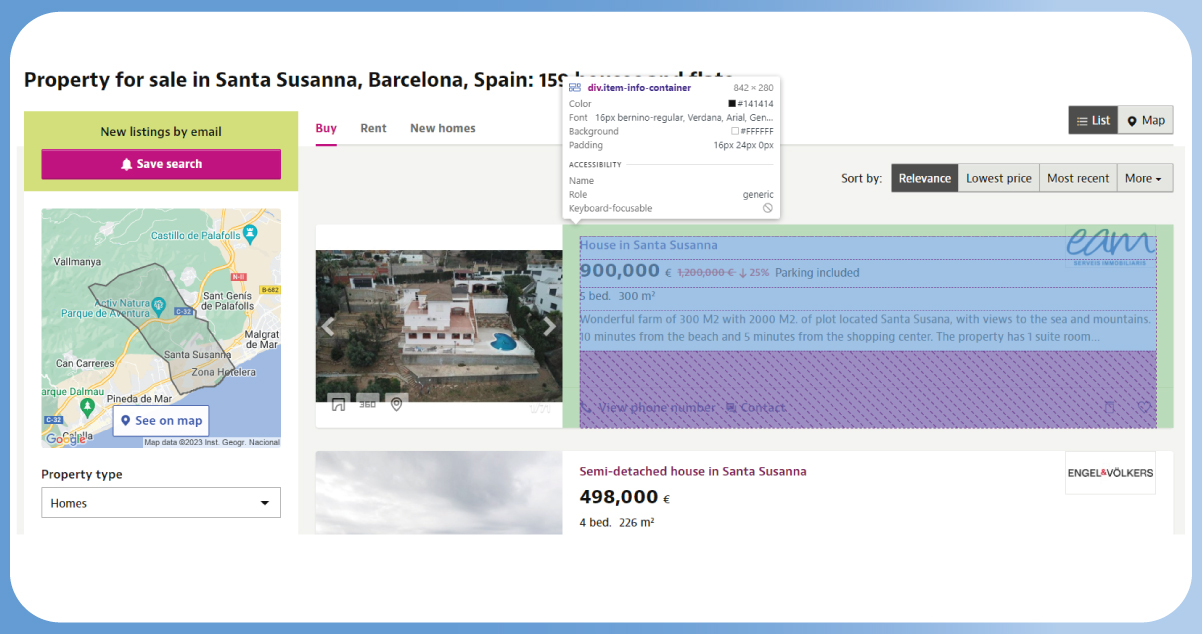

Use the selectors (XPath or CSS selectors) to specify which elements to extract from the HTML.

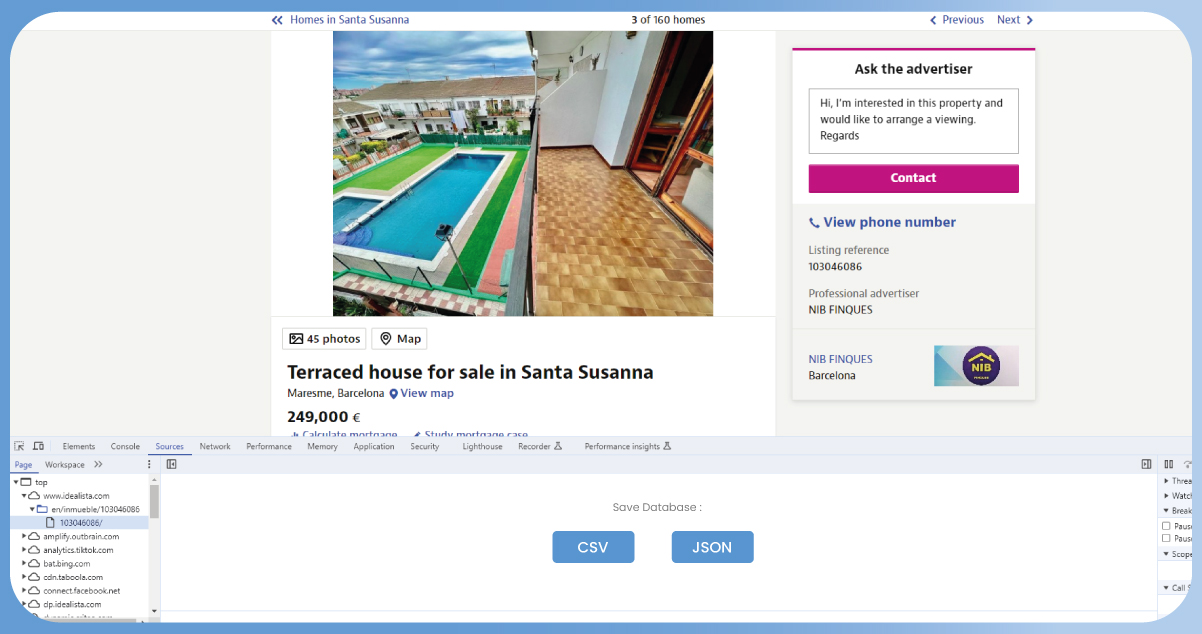

Define how to save the scraped data. You can save it to a CSV, JSON, or a database, depending on your requirements.

Implement item pipelines for data processing and storage.
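
As one possible shape for such a pipeline, the sketch below cleans the price and writes each item as one line of JSON. The class name, the output path, and the `price` and `price_eur` field names are illustrative assumptions; it also assumes the spider yields plain dictionaries.

```python
import json
import re


class JsonLinesExportPipeline:
    """Normalises the price and writes each item as one line of JSON."""

    def __init__(self, path="listings.jsonl"):
        # Assumption: output file name; change it to suit your project.
        self.path = path
        self.file = None

    def open_spider(self, spider):
        # Called once when the spider starts; "w" replaces any earlier file.
        self.file = open(self.path, "w", encoding="utf-8")

    def close_spider(self, spider):
        # Called once when the spider finishes.
        self.file.close()

    def process_item(self, item, spider):
        row = dict(item)
        # Keep only the digits of a price such as "250.000 €".
        digits = re.sub(r"\D", "", str(row.get("price", "")))
        row["price_eur"] = int(digits) if digits else None
        self.file.write(json.dumps(row, ensure_ascii=False) + "\n")
        return row
```

To activate a pipeline like this, place it in the project's pipelines.py and register it in settings.py through the `ITEM_PIPELINES` setting, for example `{"idealista_barcelona.pipelines.JsonLinesExportPipeline": 300}`.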

Open your command prompt or terminal, navigate to the project directory, and run your spider using the following command:

scrapy crawl my_spider

Replace my_spider with the name of your spider.

As your spider runs, it will extract data from Idealista Barcelona.

Monitor the output in the terminal, and check the files where you save the scraped data for any issues.

If Idealista's listings span multiple pages, implement logic to follow pagination links and scrape data from all pages.

Use tools like Cron on Unix-like systems or Task Scheduler on Windows to schedule your spider to run at specific intervals for updated data.
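
One way to make the crawl easy to schedule is a small wrapper script that the scheduler calls. The sketch below is an assumption about how you might organise this, not a required setup: the project path, the dated file name, and the helper names `build_crawl_command` and `run_crawl` are all hypothetical.

```python
import subprocess
import sys
from datetime import date
from pathlib import Path

# Assumption: location of the Scrapy project created earlier; adjust to your setup.
PROJECT_DIR = Path("idealista_barcelona")


def build_crawl_command(spider="my_spider", day=None):
    """Return the command that runs the spider and writes a dated JSON file."""
    day = day or date.today()
    output = f"listings_{day:%Y-%m-%d}.json"
    # -O overwrites the output file instead of appending to it.
    return [sys.executable, "-m", "scrapy", "crawl", spider, "-O", output]


def run_crawl():
    """Run one crawl; raises CalledProcessError if Scrapy exits with an error."""
    subprocess.run(build_crawl_command(), cwd=PROJECT_DIR, check=True)
```

A Cron entry or Task Scheduler job would then run a script that calls `run_crawl()`, producing one output file per day.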

Ensure your scraping activity complies with Idealista's terms of service and robots.txt file. Avoid overloading their servers or causing disruptions.
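
In a Scrapy project, polite crawling is usually configured in settings.py. The excerpt below uses real Scrapy setting names, but the values are illustrative assumptions rather than limits published by Idealista; tune them conservatively.

```python
# settings.py (excerpt)

# Respect the rules published in the site's robots.txt file.
ROBOTSTXT_OBEY = True

# Wait between requests to the same site (seconds); illustrative value.
DOWNLOAD_DELAY = 3

# Send only one request at a time to each domain.
CONCURRENT_REQUESTS_PER_DOMAIN = 1

# Let Scrapy slow down automatically when the server responds slowly.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 5
AUTOTHROTTLE_MAX_DELAY = 60
```

These settings reduce load on the server but do not replace reading the site's terms of service.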

Conclusion: Scraping real estate data from Idealista is a powerful tool for real estate professionals, investors, and individuals seeking valuable property insights in markets like Barcelona. The scraped data provides a wealth of information on property listings, pricing trends, and market dynamics, enabling more informed decision-making. However, it's essential to approach web scraping with responsibility, adhering to Idealista's terms of service and ethical guidelines while also being aware of the ever-evolving nature of web data and website structures. When conducted ethically and effectively, Idealista real estate data scraping can significantly enhance one's understanding of the real estate market and lead to more informed, strategic choices.

Please don't hesitate to contact iWeb Data Scraping for in-depth information! Whether you need a web scraping service or mobile app data scraping, we are here to help. Contact us today to discuss your needs and see how our data scraping solutions can offer you efficiency and dependability.