Discover how to use Python to extract stock price data from the Zerodha Kite web platform. This project guides you through building a utility that downloads stock price data in CSV format. Follow the step-by-step process to get started!

Designing a trading system requires stock price data across various timeframes. Backtesting an intraday strategy, in particular, requires data in 1-minute to 15-minute timeframes. However, such high-frequency intraday data is often not readily available to students or learners for educational purposes. To address this challenge, we developed a small utility that scrapes stock price data from Zerodha Kite. The objective is to give students and learners access to stock data for educational use, making it easier to analyze and test trading strategies. This project is a useful resource for anyone interested in learning about stock trading and strategy backtesting and in deepening their understanding of the financial markets. Here, we provide a detailed walkthrough of the steps involved in scraping Zerodha Kite stock prices using Python.
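If you download 1-minute bars, you can derive coarser intraday timeframes such as 15 minutes yourself instead of scraping each one separately. The following is a minimal pandas sketch, assuming a DataFrame with a `DatetimeIndex` and lowercase `open`, `high`, `low`, `close` and `volume` columns (these column names are an assumption for illustration, not the article's schema).

```python
import pandas as pd


def resample_ohlcv(df: pd.DataFrame, rule: str = "15min") -> pd.DataFrame:
    """Aggregate 1-minute OHLCV bars (DatetimeIndex) into coarser bars."""
    agg = {
        "open": "first",   # first price in each window
        "high": "max",     # highest price in each window
        "low": "min",      # lowest price in each window
        "close": "last",   # last price in each window
        "volume": "sum",   # total volume traded in each window
    }
    # closed/label="left": a bar stamped 09:15 covers 09:15:00 up to 09:29:59
    out = df.resample(rule, label="left", closed="left").agg(agg)
    # Windows with no trades (e.g. outside market hours) have no open price; drop them
    return out.dropna(subset=["open"])
```

Because pandas anchors bins to midnight by default, 15-minute bins line up with the NSE session open at 09:15.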

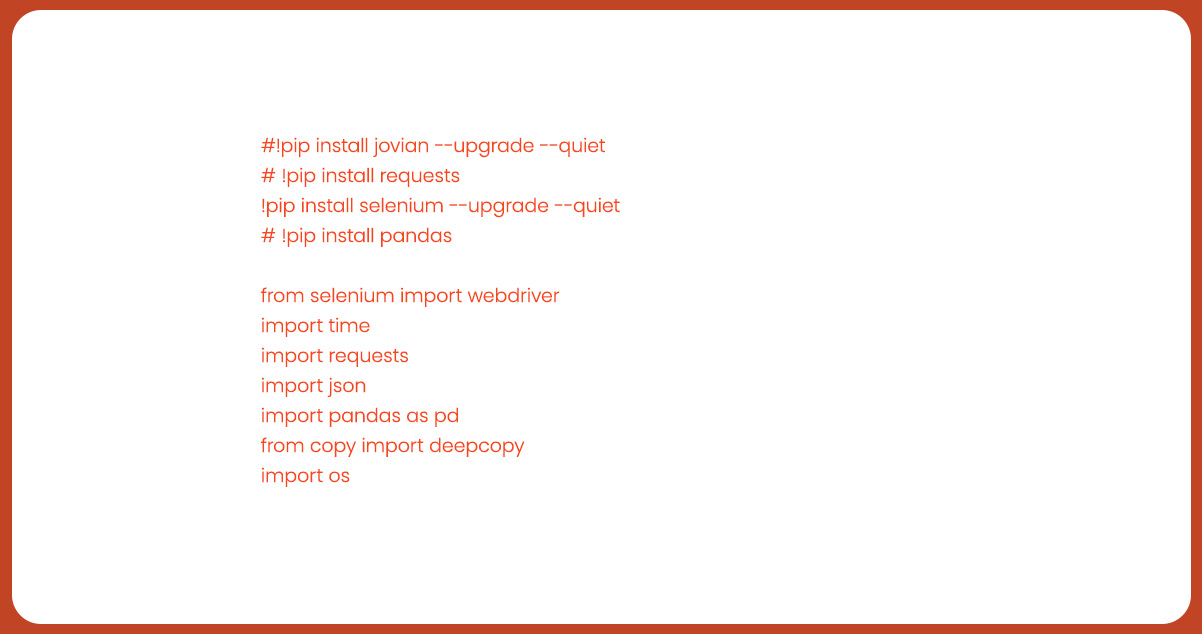

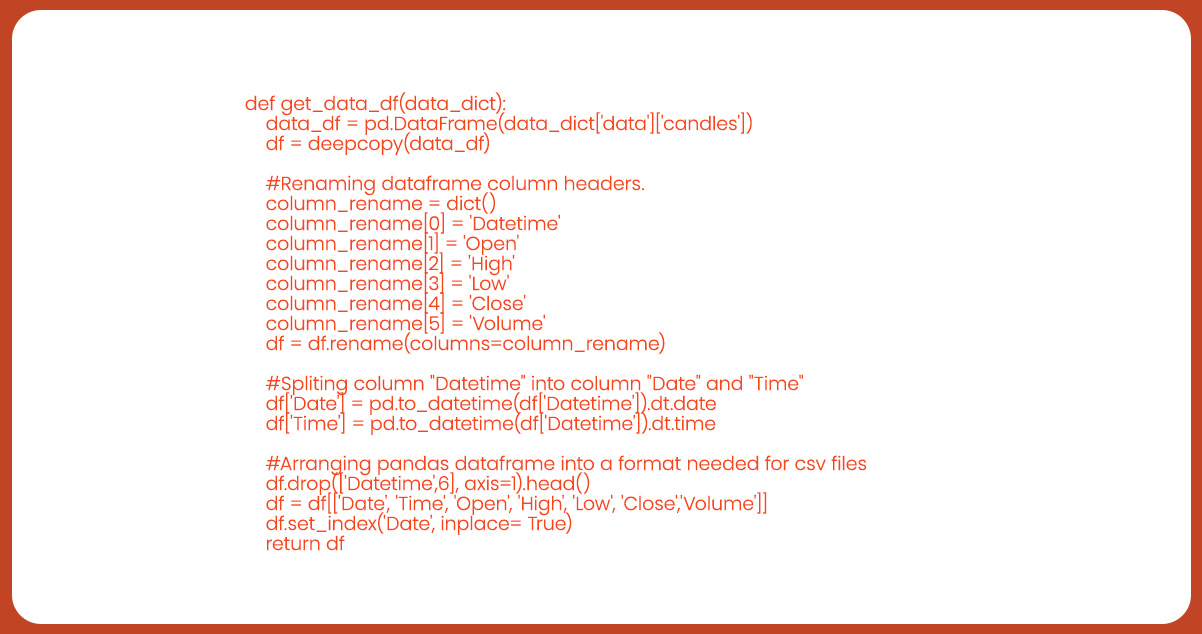

Web scraping is an automated technique for extracting large amounts of data from websites using software or scripts; here, we apply it to Zerodha Kite. Typically, it involves obtaining unstructured data (such as HTML), converting it into structured data, and storing it in spreadsheets or databases. To prepare this data for analysis, processes such as data cleaning and formatting are applied. Libraries and modules such as pandas, numpy, datetime, requests, and json help with these cleaning and structuring tasks. These steps turn the scraped data into a form that is ready for further analysis or for integration into other applications.
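As an illustration of the cleaning and structuring step, here is a minimal sketch that turns raw rows into a typed, de-duplicated pandas DataFrame. The row layout `[date, open, high, low, close, volume]` and the column names are assumptions made for this example.

```python
import pandas as pd

# Assumed row layout for this sketch: [date, open, high, low, close, volume]
COLUMNS = ["date", "open", "high", "low", "close", "volume"]


def clean_ohlcv(raw_rows) -> pd.DataFrame:
    """Turn raw list-of-lists rows into a clean, typed DataFrame."""
    df = pd.DataFrame(raw_rows, columns=COLUMNS)
    # Parse timestamps; values that cannot be parsed become NaT
    df["date"] = pd.to_datetime(df["date"], errors="coerce")
    # Coerce price and volume fields to numbers; bad values become NaN
    for col in COLUMNS[1:]:
        df[col] = pd.to_numeric(df[col], errors="coerce")
    # Drop unparseable rows, keep the first row per timestamp, sort by time
    df = df.dropna().drop_duplicates(subset="date").sort_values("date")
    return df.reset_index(drop=True)
```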

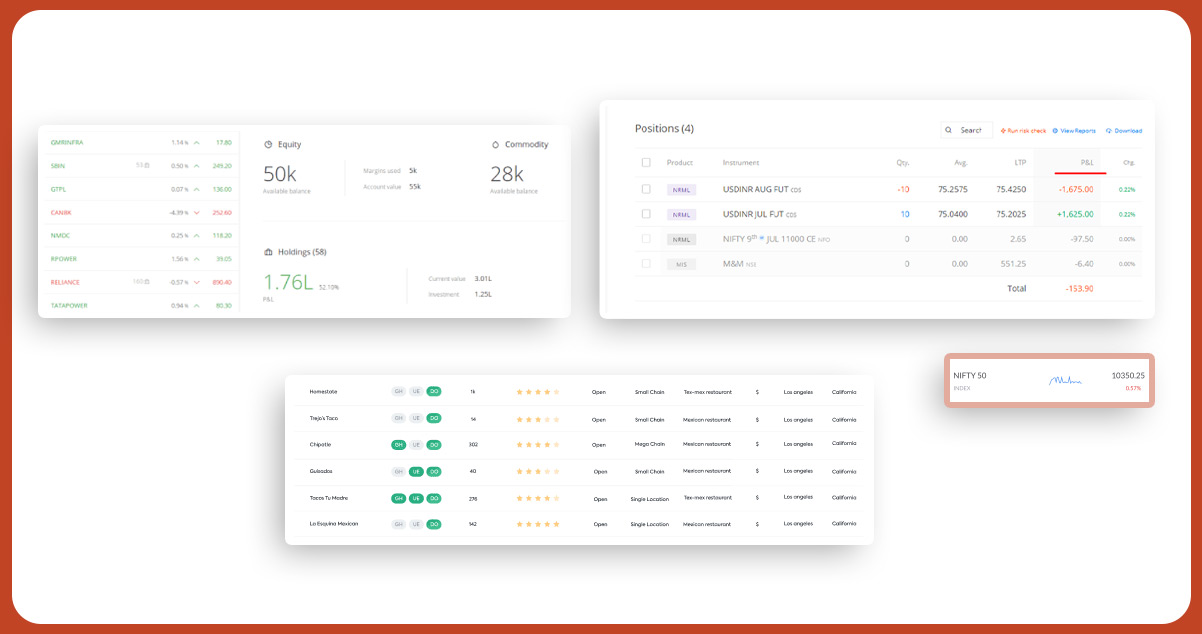

The following data fields are available when extracting data from the Zerodha Kite web platform:

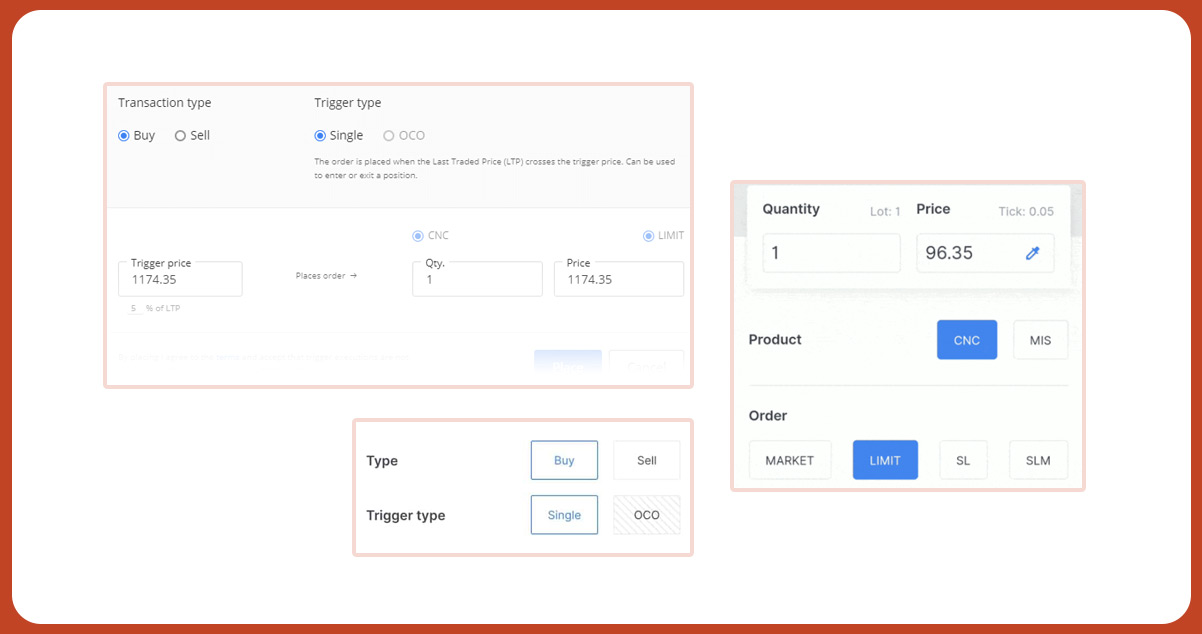

To scrape data from "https://kite.zerodha.com/", follow these steps:

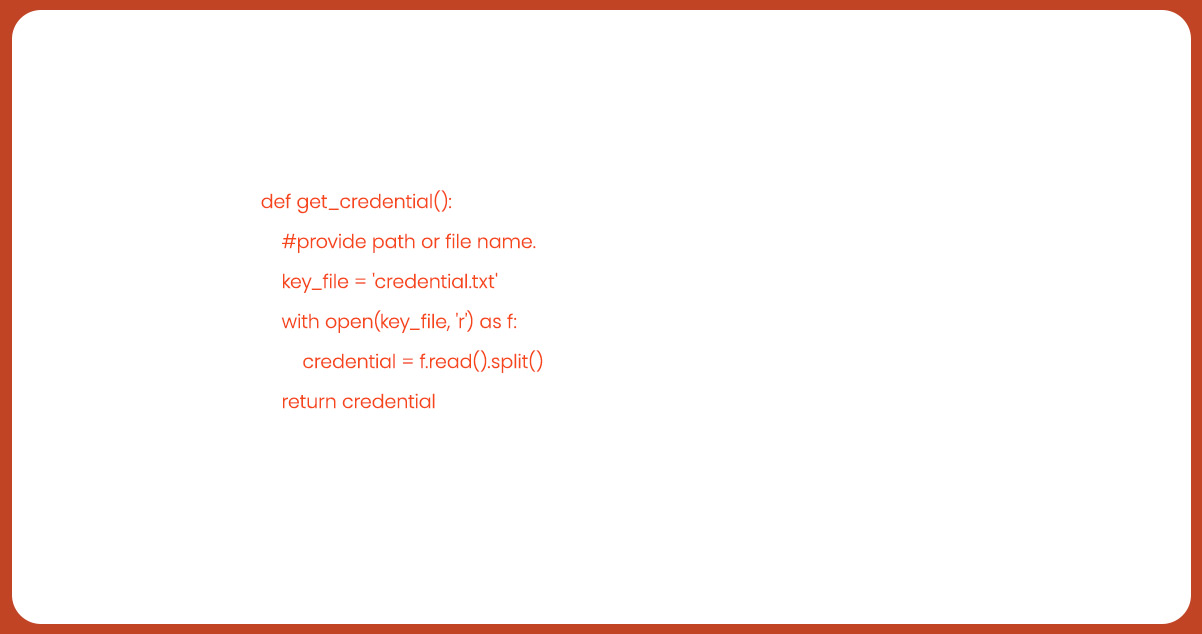

Read login credentials from a local text file for automated login.
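A minimal sketch of the `get_credential()` step, assuming the text file holds one value per line (user ID first, then password, optionally followed by further values). That file layout and the default filename `credentials.txt` are hypothetical; adapt them to your own file, and keep the file out of version control.

```python
from pathlib import Path


def get_credential(path="credentials.txt"):
    """Read login details from a local text file, one value per line.

    Assumed (hypothetical) layout:
        line 1: Kite user ID
        line 2: password
        further lines (optional): any extra values your login flow needs
    """
    lines = Path(path).read_text(encoding="utf-8").splitlines()
    # Strip whitespace and skip blank lines so trailing newlines do not matter
    values = [line.strip() for line in lines if line.strip()]
    if len(values) < 2:
        raise ValueError(f"{path} must contain at least a user ID and a password")
    return values
```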

These steps will automate the process of scraping data from the specified website and allow you to store the relevant information for further analysis or use.

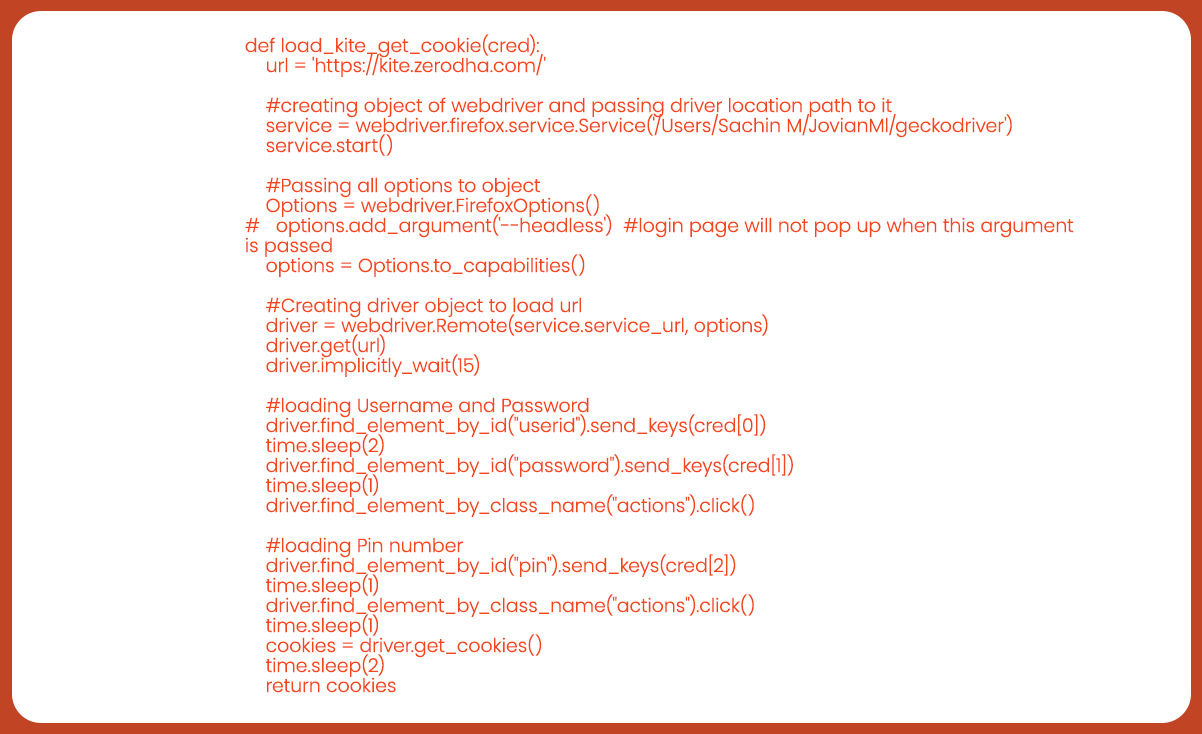

Set up the Firefox WebDriver to load the Kite login URL and enable autologin.

Create the "load_kite_get_cookie()" function, which will receive a list containing credential information obtained from the "get_credential()" function.

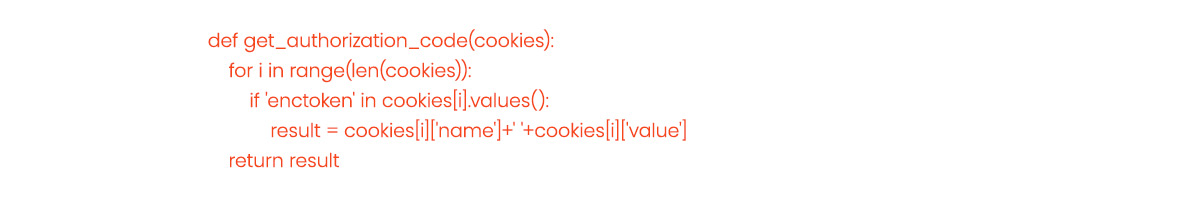

The function returns the browser cookies for the Kite URL, which are needed later to extract the authentication code.
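A sketch of pulling the authentication value out of those cookies. Selenium's `driver.get_cookies()` returns a list of dicts with `name` and `value` keys. Zerodha does not document the web app's cookies, so the cookie name `enctoken` and the `Authorization: enctoken <value>` header format used here are assumptions based on how the Kite web app is commonly observed to authenticate; confirm both in your browser's developer tools.

```python
def get_enctoken(cookies):
    """Return the 'enctoken' cookie value from Selenium's get_cookies() output.

    ASSUMPTION: the auth cookie is named 'enctoken' (undocumented; verify it).
    """
    for cookie in cookies:
        if cookie.get("name") == "enctoken":
            return cookie["value"]
    raise KeyError("enctoken cookie not found; the login may not have completed")


def auth_headers(enctoken):
    """Build request headers. ASSUMPTION: header format is 'enctoken <value>'."""
    return {"Authorization": f"enctoken {enctoken}"}
```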

Use Selenium WebDriver to load the URL and log in to Zerodha Kite automatically.

Inspect the login page to find the relevant element identifiers, such as "user_id," and pass them to the Selenium driver.

Similarly, obtain the class information for the login button to facilitate the autologin process.
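The login steps above can be sketched as follows, assuming Selenium 4 and a Firefox driver. The element locators (`user_id`, `password`, and the submit-button CSS selector) are assumptions; replace them with what you find via "Inspect". To match the button by its class, use a selector such as `button.<class-name>`. Kite's login also includes a second-factor step after the password, which this sketch does not automate, so you may need to complete it in the opened browser (raise `wait_seconds`) or extend the function.

```python
import time

KITE_LOGIN_URL = "https://kite.zerodha.com/"


def load_kite_get_cookie(credentials, driver=None, user_field="user_id",
                         password_field="password",
                         submit_selector="button[type='submit']",
                         wait_seconds=3.0):
    """Log in to Kite with Selenium and return the browser cookies.

    credentials: list whose first two items are user ID and password.
    The locator defaults are ASSUMPTIONS; confirm them with "Inspect".
    """
    if driver is None:
        # Imported lazily so the function can also be exercised with a stand-in driver
        from selenium import webdriver
        driver = webdriver.Firefox()
    user_id, password = credentials[0], credentials[1]
    driver.get(KITE_LOGIN_URL)
    time.sleep(wait_seconds)  # crude wait for the login form to render
    # Selenium 4 locator strategies are plain strings:
    # By.ID == "id", By.CSS_SELECTOR == "css selector"
    driver.find_element("id", user_field).send_keys(user_id)
    driver.find_element("id", password_field).send_keys(password)
    driver.find_element("css selector", submit_selector).click()
    time.sleep(wait_seconds)  # wait for the post-login page (second factor not handled)
    return driver.get_cookies()
```

Typical use is `cookies = load_kite_get_cookie(get_credential())`. Fixed sleeps keep the sketch short; Selenium's `WebDriverWait` with expected conditions is a more robust way to wait for elements.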

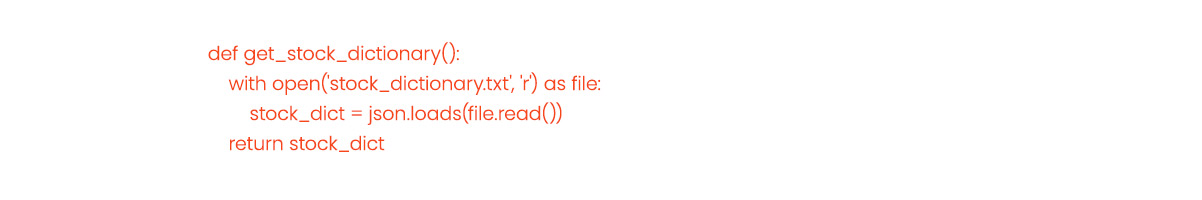

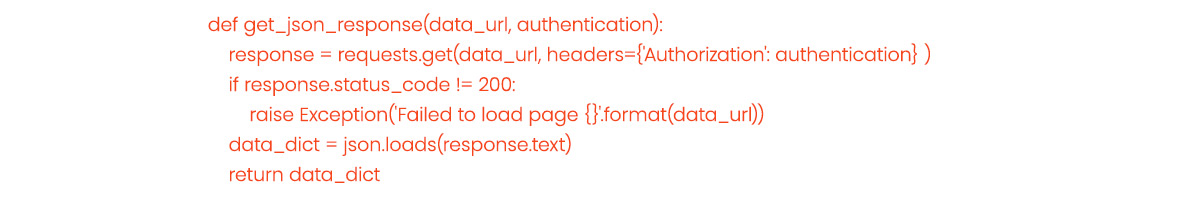

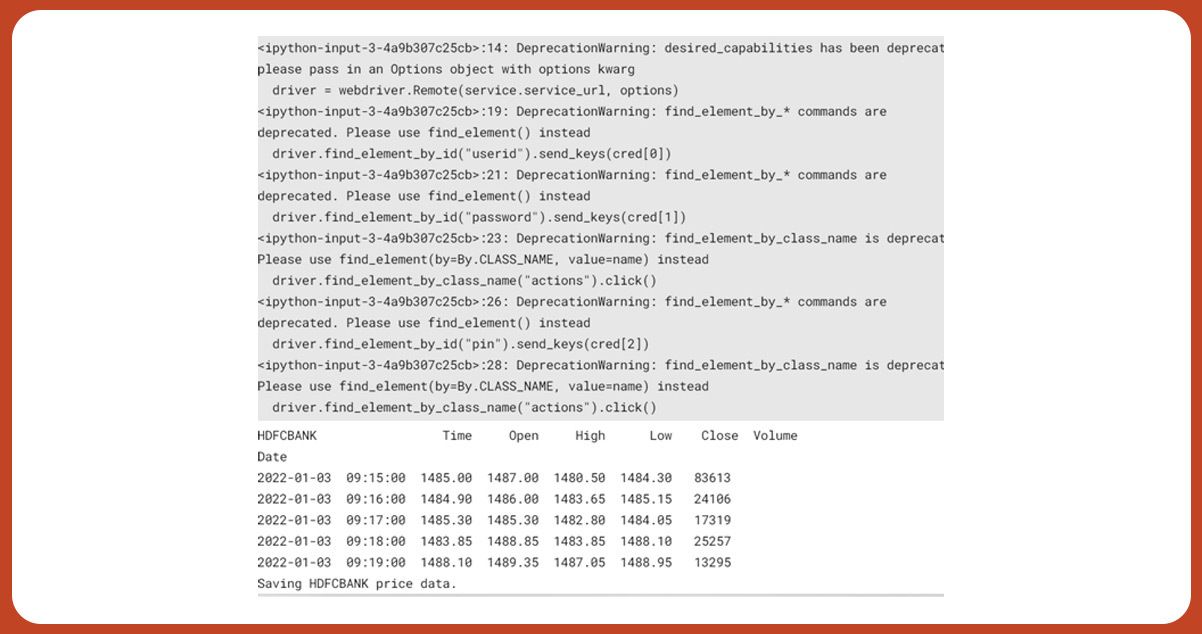

Next, scrape the stock prices in Python for each stock in the provided list.
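A sketch of the per-stock request and parsing step. The response shape (`{"status": "success", "data": {"candles": [[timestamp, open, high, low, close, volume], ...]}}`), the interval names (`minute`, `5minute`, `15minute`, `day`, ...) and the `yyyy-mm-dd hh:mm:ss` date format follow the documented Kite Connect historical-candles API. The base URL below is an assumption about the Kite web app's equivalent endpoint, and the instrument token for each stock is assumed to come from your stock list; verify both in your browser's Network tab.

```python
from urllib.parse import urlencode

import pandas as pd

# ASSUMPTION: base URL of the historical-candles endpoint used by the Kite web app.
# It mirrors the documented Kite Connect path /instruments/historical/{token}/{interval};
# confirm the exact URL in your browser's Network tab before relying on it.
HISTORICAL_BASE = "https://kite.zerodha.com/oms/instruments/historical"


def build_historical_url(instrument_token, interval, start, end, base=HISTORICAL_BASE):
    """Build the request URL for one instrument, interval and date range."""
    params = {
        # "yyyy-mm-dd hh:mm:ss"; urlencode escapes the space and the colons
        "from": start.strftime("%Y-%m-%d %H:%M:%S"),
        "to": end.strftime("%Y-%m-%d %H:%M:%S"),
    }
    return f"{base}/{instrument_token}/{interval}?{urlencode(params)}"


def candles_to_frame(payload):
    """Convert a {"status": ..., "data": {"candles": [...]}} response into a DataFrame."""
    if payload.get("status") != "success":
        raise ValueError(f"request failed: {payload.get('message', payload)}")
    candles = payload["data"]["candles"]
    # Each candle: [timestamp, open, high, low, close, volume], plus open interest if requested
    cols = ["date", "open", "high", "low", "close", "volume"]
    if candles and len(candles[0]) == 7:
        cols = cols + ["oi"]
    df = pd.DataFrame(candles, columns=cols)
    df["date"] = pd.to_datetime(df["date"])
    return df


def fetch_candles(url, headers, timeout=30):
    """Download one URL with requests and parse the JSON body into a DataFrame."""
    import requests  # third-party; imported here so the helpers above work without it

    resp = requests.get(url, headers=headers, timeout=timeout)
    resp.raise_for_status()
    return candles_to_frame(resp.json())
```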

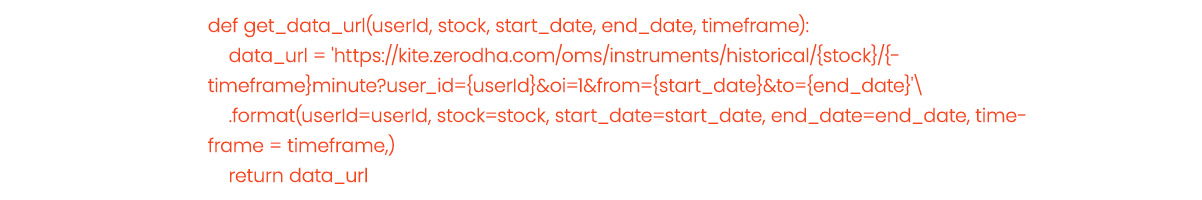

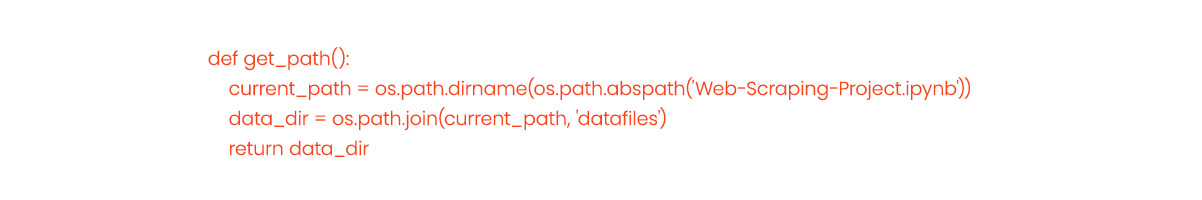

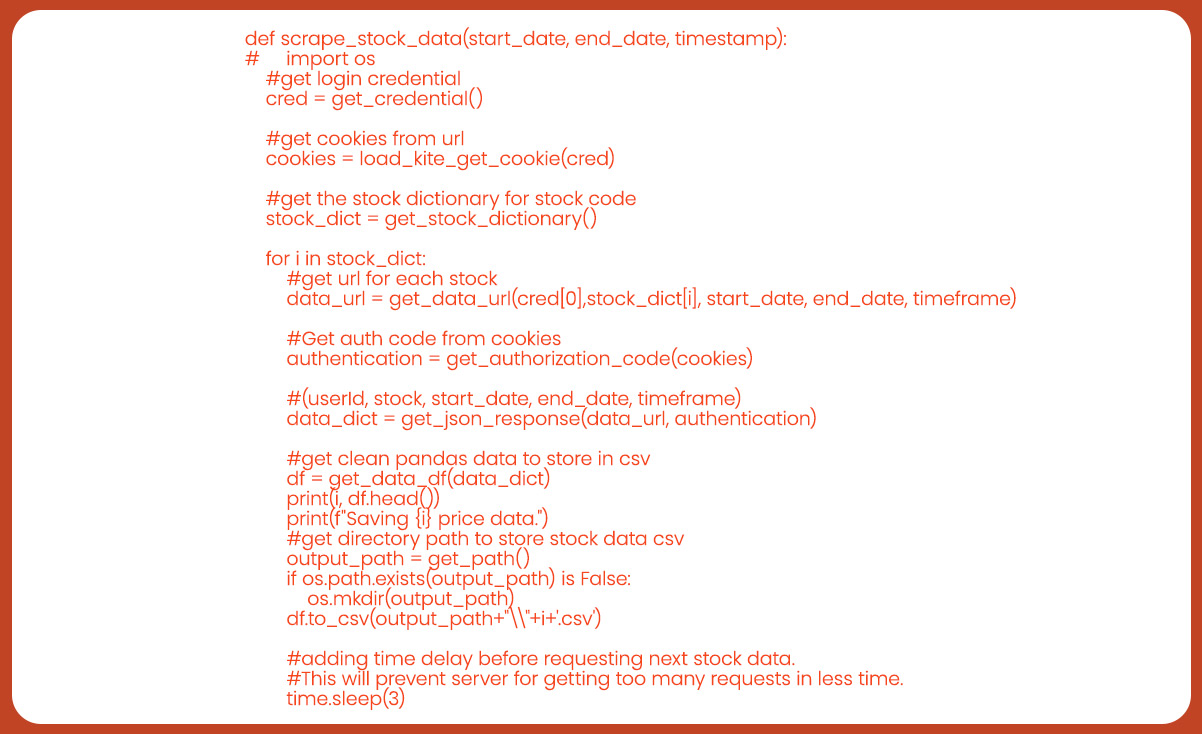

Combine all the functions into a single comprehensive function that takes the parameters start_date, end_date, and timestamp. This function scrapes data for all stocks and then saves the collected data in the designated directory as individual CSV files.
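A minimal sketch of such a wrapper. Here `stocks` is assumed to be a dict of symbol to instrument token, `timestamp` is treated as the candle interval (for example `"minute"` or `"15minute"`), and `fetch_frame` is a hypothetical callable standing in for the per-stock download step; the filename pattern and the pause between requests are illustrative choices, not the article's.

```python
import os
import time


def scrape_all_stocks(stocks, start_date, end_date, timestamp, out_dir,
                      fetch_frame, pause_seconds=0.5):
    """Download every stock in `stocks` and save one CSV file per stock.

    stocks: dict of symbol -> instrument token (hypothetical input shape)
    timestamp: candle interval, e.g. "minute" or "15minute"
    fetch_frame: hypothetical callable(token, timestamp, start_date, end_date) -> DataFrame
    pause_seconds: delay between requests so the server is not hammered
    Returns (list of saved file paths, dict of symbol -> error message).
    """
    os.makedirs(out_dir, exist_ok=True)
    saved, failed = [], {}
    for symbol, token in stocks.items():
        try:
            df = fetch_frame(token, timestamp, start_date, end_date)
        except Exception as exc:  # record the failure and move on to the next stock
            failed[symbol] = str(exc)
            continue
        name = f"{symbol}_{timestamp}_{start_date:%Y%m%d}_{end_date:%Y%m%d}.csv"
        path = os.path.join(out_dir, name)
        df.to_csv(path, index=False)
        saved.append(path)
        time.sleep(pause_seconds)
    return saved, failed
```

Collecting failures instead of stopping on the first error means one bad symbol does not abort a long download run; check the returned dict afterwards and retry those stocks.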

Execute the code to scrape stock data and save it as CSV files.
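After a run, it can help to load a saved file back and run a few basic checks before using it for backtesting. A minimal sketch, assuming each CSV has a `date` column plus `open`, `high`, `low`, `close` and `volume` columns (column names are an assumption):

```python
import pandas as pd


def load_saved_csv(path, date_col="date"):
    """Read one saved CSV back into a time-indexed DataFrame."""
    df = pd.read_csv(path, parse_dates=[date_col])
    return df.set_index(date_col).sort_index()


def sanity_check(df):
    """Return a list of basic data-quality problems (an empty list means none found)."""
    problems = []
    if df.empty:
        problems.append("no rows")
    if df.index.has_duplicates:
        problems.append("duplicate timestamps")
    if (df["high"] < df["low"]).any():
        problems.append("high below low")
    if ((df["close"] > df["high"]) | (df["close"] < df["low"])).any():
        problems.append("close outside high-low range")
    return problems
```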

For further details, contact iWeb Data Scraping now! You can also reach us for all your web scraping and mobile app data scraping needs.