Ticketmaster Entertainment is a prominent American ticket sales and distribution company headquartered in Beverly Hills, California, with operations in multiple countries. Its fulfillment centers in Charleston, West Virginia, and Pharr, Texas, support the company's ticket fulfillment. Ticketmaster serves both the primary and secondary ticket markets.

Ticketmaster's clients include artists, venues, and promoters, and the platform lets them manage events and set ticket prices. The company sells tickets on behalf of these clients, giving them access to a broad audience. Scraping ticket data from Ticketmaster lets you efficiently gather event details, ticket prices, availability, and more, providing valuable insights for informed decision-making and strategic planning.

Web data scraping has become a popular technique for automating tasks that involve interacting with web pages. It is invaluable for extracting information from online platforms, including complex pages whose content loads dynamically. For gathering data from ticket and event websites such as Ticketmaster, a dedicated crawler is the recommended approach.

A Ticketmaster web crawler is a software tool that automates the extraction of information from Ticketmaster's website. It systematically navigates web pages, gathers data on events, ticket prices, venues, and related details, and presents the results in a structured format. A Ticketmaster data scraper thus removes the burden of manual data collection and offers a streamlined way to access up-to-date event information.

In this article, we explore how Ticketmaster crawlers work, why they matter, and what benefits they offer. From efficient data extraction to real-time updates, competitive insights, and market research, we will examine the various dimensions of Ticketmaster crawling and how these tools change the way we interact with the vibrant world of live events and ticket sales.

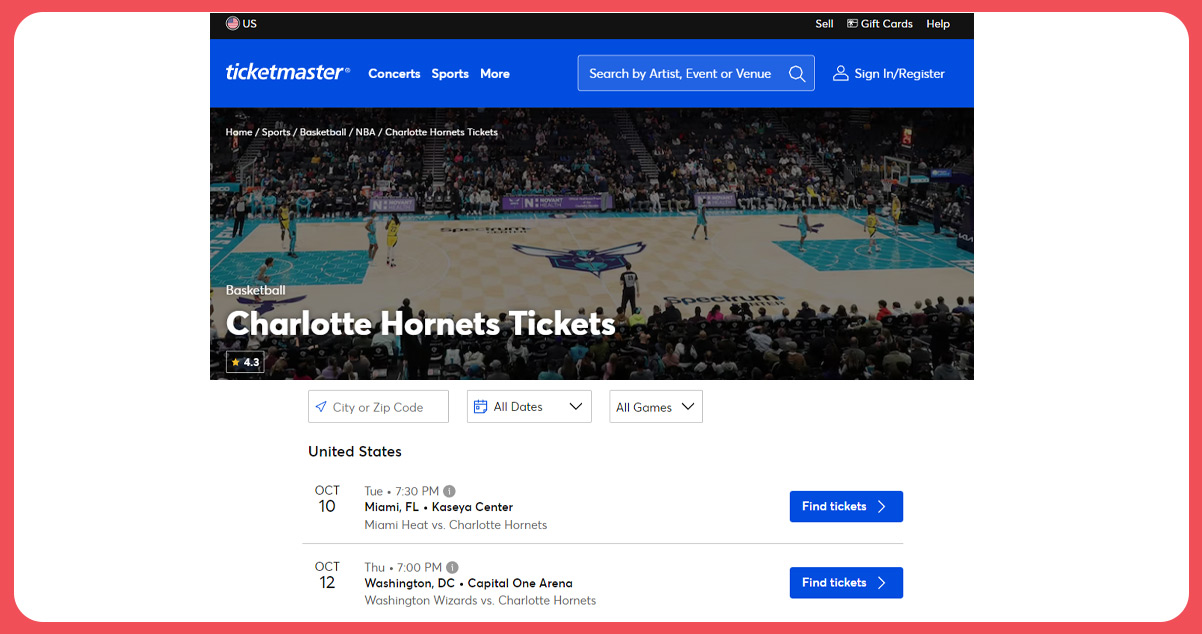

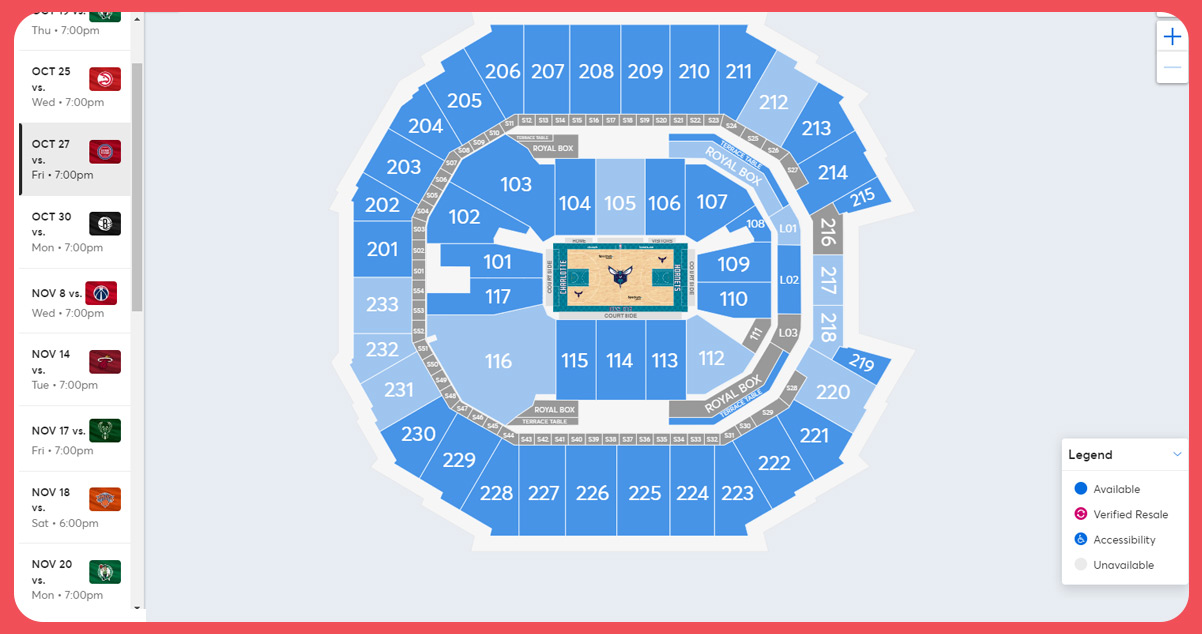

1. Efficient Data Extraction: Ticketmaster is a titan in event ticketing. With so many events, varied ticket prices, and constantly changing availability, collecting this information by hand is laborious, time-consuming, and prone to human error. Ticketmaster web scraping addresses these challenges by automating data extraction. As these crawlers navigate Ticketmaster's pages, they systematically capture event names, dates, artist details, venue information, ticket prices, and other relevant data points. Automation reduces manual errors and makes the collected data more complete.

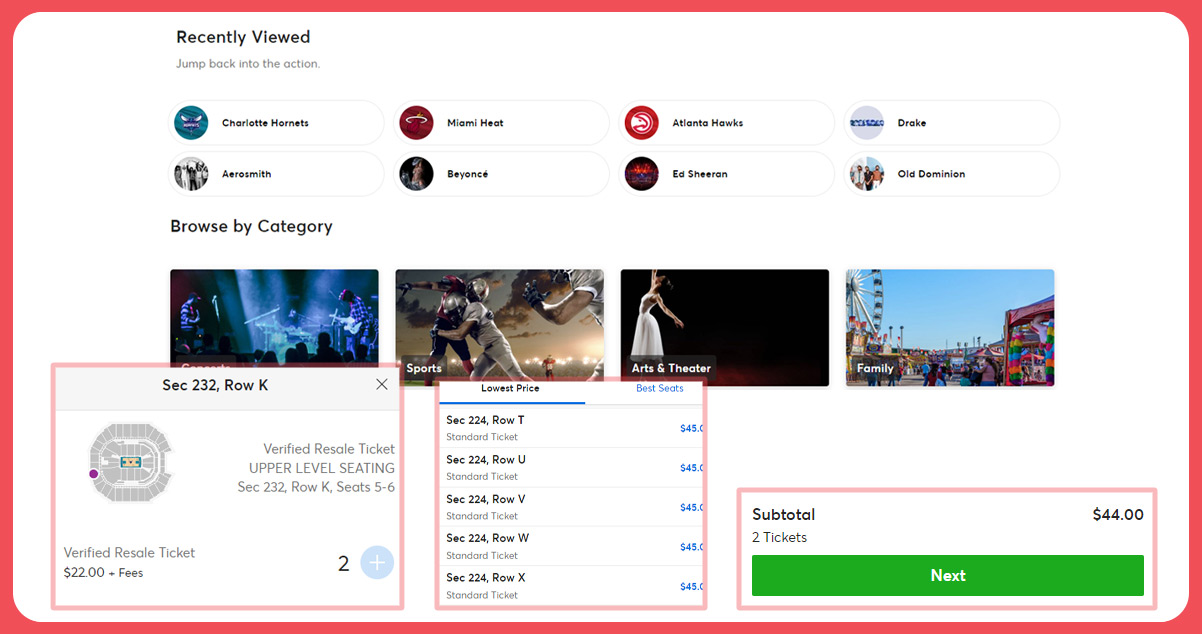

2. Real-time Updates: Because events and ticket availability change constantly, users need current information. A Ticketmaster scraper can provide it: configured to revisit Ticketmaster's website periodically, the crawler captures the latest data, so users see up-to-date event details, ticket availability, and pricing.

3. Competition Analysis: For artists, venues, and promoters, understanding the competitive landscape is paramount. Ticketmaster scraping services excel by collecting data on competitors' events and ticket prices. By consistently monitoring these variables, crawlers furnish valuable insights that aid strategic decision-making. This information can influence pricing strategies, event planning, and marketing efforts to gain a competitive edge.

4. Market Research: Ticket sales trends, customer preferences, and demand patterns are invaluable insights for event organizers and marketers, and much of this intelligence can be drawn from the data Ticketmaster hosts. With web crawlers, stakeholders can collect it efficiently. Over repeated crawls, the collected data builds a history that lets users identify trends, make informed projections, and tailor their offerings to audience preferences.
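Item 2's periodic revisits only pay off if you can tell what changed between crawls. Below is a minimal Python sketch, assuming each crawl produces records keyed by an event ID with a lowest price and an available-ticket count; `EventSnapshot` and its fields are hypothetical names for illustration, not Ticketmaster's data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EventSnapshot:
    # Hypothetical record shape: adapt the fields to what your parser extracts.
    event_id: str
    min_price: float
    tickets_available: int


def diff_snapshots(
    old: dict[str, EventSnapshot], new: dict[str, EventSnapshot]
) -> dict[str, list[str]]:
    """Compare two crawls keyed by event ID and list what changed."""
    changes: dict[str, list[str]] = {
        "added": [],
        "removed": [eid for eid in old if eid not in new],
        "price_changed": [],
        "availability_changed": [],
    }
    for eid, snap in new.items():
        before = old.get(eid)
        if before is None:
            changes["added"].append(eid)
            continue
        if snap.min_price != before.min_price:
            changes["price_changed"].append(eid)
        if snap.tickets_available != before.tickets_available:
            changes["availability_changed"].append(eid)
    return changes


yesterday = {"E1": EventSnapshot("E1", 89.0, 1200), "E2": EventSnapshot("E2", 45.0, 300)}
today = {"E1": EventSnapshot("E1", 99.0, 1200), "E3": EventSnapshot("E3", 60.0, 500)}
print(diff_snapshots(yesterday, today))
# {'added': ['E3'], 'removed': ['E2'], 'price_changed': ['E1'], 'availability_changed': []}
```

Keying by event ID keeps the comparison linear in the number of events, and each change list can feed an alert or a price-history table.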

A well-built Ticketmaster web crawler includes features that improve its data extraction, its navigation, and its ability to cope with dynamic web pages. Together, these features make it an efficient tool for retrieving and processing event information from Ticketmaster's website. Key technical features include:

It can check for low ticket availability (less than 1% remaining).

It keeps track of which events it has already checked, so each scan doesn't take a long time.

It lets you search by artist, venue, and day of the week.

It automatically identifies the available delivery methods (WCO, Paperless, etc.) while checking ticket availability.

It flags events with a large volume of tickets sold within a specific period.
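These features reduce to a few simple checks over extracted records. A minimal sketch, assuming a hypothetical `Event` record with capacity and availability counts, plus a history of (timestamp, tickets available) samples for measuring sales volume. The delivery-method check depends on page markup not covered here, so it appears only as a field.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Event:
    # Hypothetical fields; adapt them to what your parser actually extracts.
    event_id: str
    artist: str
    venue: str
    starts_at: datetime
    capacity: int
    available: int
    delivery_methods: list[str] = field(default_factory=list)  # e.g. ["Paperless"]


def is_low_availability(event: Event, threshold: float = 0.01) -> bool:
    """True when less than `threshold` (default 1%) of tickets remain."""
    return event.capacity > 0 and event.available / event.capacity < threshold


def search(events, artist=None, venue=None, weekday=None):
    """Filter by artist, venue, and/or weekday (0 = Monday ... 6 = Sunday)."""
    return [
        e for e in events
        if (artist is None or e.artist.lower() == artist.lower())
        and (venue is None or e.venue.lower() == venue.lower())
        and (weekday is None or e.starts_at.weekday() == weekday)
    ]


def sold_in_window(history, window: timedelta, now: datetime) -> int:
    """Tickets sold within `window`, from (timestamp, available) samples.

    Negative if tickets were released back into inventory.
    """
    recent = sorted((t, n) for t, n in history if now - window <= t <= now)
    if len(recent) < 2:
        return 0
    return recent[0][1] - recent[-1][1]


class CheckedTracker:
    """Remembers which events were already scanned so later scans can skip them."""

    def __init__(self) -> None:
        self._seen: set[str] = set()

    def needs_check(self, event_id: str) -> bool:
        if event_id in self._seen:
            return False
        self._seen.add(event_id)
        return True
```

An event could then be flagged as selling fast when `sold_in_window(...)` exceeds a threshold you choose.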

Scraping data from Ticketmaster with a web crawler involves a series of steps to systematically navigate the website, extract relevant information, and organize it for further use. Here's a breakdown of the typical steps:

Identify Target URLs: Identify the specific Ticketmaster event pages or categories you want to scrape. These URLs will serve as the starting points for your crawler.

URL Queue Management: Create a queue of URLs to visit. This queue will dictate the order in which the crawler accesses different event pages.
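A FIFO queue plus a set of already-seen URLs covers both queue management and the loop-and-iterate step later in this list. A minimal sketch: `fetch` and `extract` are hypothetical caller-supplied functions (for example, the retrieval and parsing steps that follow), not a library API.

```python
from collections import deque
from typing import Callable, Iterable


def crawl(
    seed_urls: Iterable[str],
    fetch: Callable[[str], str],
    extract: Callable[[str, str], tuple[list[dict], list[str]]],
    max_pages: int = 100,
) -> list[dict]:
    """Breadth-first crawl that visits each URL at most once.

    fetch(url) returns HTML; extract(url, html) returns (records, new_urls).
    """
    queue: deque[str] = deque()
    seen: set[str] = set()

    def enqueue(url: str) -> None:
        if url not in seen:  # skip URLs already queued or visited
            seen.add(url)
            queue.append(url)

    for url in seed_urls:
        enqueue(url)

    records: list[dict] = []
    pages = 0
    while queue and pages < max_pages:
        url = queue.popleft()  # FIFO order gives breadth-first traversal
        found, links = extract(url, fetch(url))
        records.extend(found)
        pages += 1
        for link in links:
            enqueue(link)
    return records
```

The `max_pages` cap is a safety limit so a link-rich site can't keep the crawler running indefinitely.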

Page Retrieval: The crawler takes the next URL from the queue and retrieves the HTML content of the corresponding page using an HTTP request.
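A minimal page-retrieval sketch using only Python's standard library. The User-Agent string is a placeholder assumption; identify your own crawler honestly. If a page builds its content with JavaScript, a plain HTTP fetch like this returns only the initial HTML, and a browser-automation tool may be needed instead.

```python
from urllib.request import Request, urlopen

# Hypothetical identifying User-Agent; replace with your own name and contact.
USER_AGENT = "example-event-crawler/0.1 (contact@example.com)"


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Retrieve a page over HTTP and decode its body to text."""
    request = Request(url, headers={"User-Agent": USER_AGENT})
    with urlopen(request, timeout=timeout) as response:
        # Use the charset the server declares, falling back to UTF-8.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

The timeout keeps one slow server from stalling the whole crawl; failed requests raise `urllib.error.URLError`, which the error-handling step can catch.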

Parsing HTML Content: Parse the HTML using parsing libraries like BeautifulSoup or lxml. This step involves identifying the relevant data elements (event names, dates, artists, ticket prices, etc.) using CSS selectors or XPath expressions.

Data Extraction: Extract the identified data elements from the parsed HTML content, pulling out the text or attribute values that hold the desired information.
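A minimal parsing-and-extraction sketch with BeautifulSoup, the first library named above (install with `pip install beautifulsoup4`). Every CSS class here (`event-card`, `event-name`, and so on) is a hypothetical placeholder, not Ticketmaster's real markup, which you would need to inspect yourself and which can change at any time.

```python
from bs4 import BeautifulSoup

# Hypothetical selectors: replace them after inspecting the live page.
CARD_SELECTOR = ".event-card"
FIELD_SELECTORS = {
    "name": ".event-name",
    "date": ".event-date",
    "venue": ".event-venue",
    "price": ".event-price",
}


def extract_events(html: str) -> list[dict]:
    """Return one dict per event card, with None for any field not found."""
    soup = BeautifulSoup(html, "html.parser")
    events = []
    for card in soup.select(CARD_SELECTOR):
        record = {}
        for name, selector in FIELD_SELECTORS.items():
            node = card.select_one(selector)  # first match inside this card
            record[name] = node.get_text(strip=True) if node else None
        events.append(record)
    return events
```

Scoping each field lookup to its card keeps a missing price on one event from shifting values onto the next.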

Pagination Handling: If event listings are spread across multiple pages, implement pagination logic to navigate those pages and extract data from each one.
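A minimal pagination sketch, assuming the listings paginate through a `page` query parameter. That parameter name is a hypothetical; check how the real listing URLs change from one page to the next. `fetch` and `extract` are caller-supplied functions.

```python
from typing import Callable
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_page(url: str, page: int, param: str = "page") -> str:
    """Return `url` with its `param` query parameter set to `page`."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    query[param] = str(page)
    return urlunsplit(parts._replace(query=urlencode(query)))


def scrape_all_pages(
    base_url: str,
    fetch: Callable[[str], str],
    extract: Callable[[str], list],
    max_pages: int = 50,
) -> list:
    """Walk pages 1, 2, 3, ... until one yields no events or `max_pages` is reached."""
    results: list = []
    for page in range(1, max_pages + 1):
        events = extract(fetch(with_page(base_url, page)))
        if not events:  # an empty page signals the end of the listing
            break
        results.extend(events)
    return results


print(with_page("https://example.com/search?q=rock", 2))
# https://example.com/search?q=rock&page=2
```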

Data Storage: Store the extracted data in a structured format, such as a database (e.g., MySQL, PostgreSQL) or a flat file (e.g., CSV, JSON). Organize the data into tables or categories for easy access and analysis.
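A minimal storage sketch using SQLite from Python's standard library as a stand-in for MySQL or PostgreSQL. `INSERT OR REPLACE` keyed on a hypothetical `event_id` column means a re-crawl updates existing rows instead of duplicating them; the equivalent upsert syntax differs in MySQL and PostgreSQL.

```python
import sqlite3


def save_events(conn: sqlite3.Connection, events: list[dict]) -> None:
    """Create the table if needed, then insert or update each event by ID."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS events (
               event_id  TEXT PRIMARY KEY,
               name      TEXT,
               venue     TEXT,
               starts_at TEXT,   -- ISO 8601 string
               min_price REAL
           )"""
    )
    conn.executemany(
        """INSERT OR REPLACE INTO events (event_id, name, venue, starts_at, min_price)
           VALUES (:event_id, :name, :venue, :starts_at, :min_price)""",
        events,
    )
    conn.commit()
```

Named placeholders (`:event_id`) let each extracted dict be passed directly and keep scraped text from being interpolated into the SQL.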

Loop and Iterate: Repeat the process for every URL in the queue: visit it, retrieve its HTML content, and extract and store its data. Continue until all relevant URLs have been processed.

Error Handling: Implement error handling to manage exceptions, timeouts, and connectivity issues gracefully. This keeps the crawler robust and running without interruption.
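A common error-handling pattern is retrying transient failures with exponential backoff. A minimal sketch; the attempt count and base delay are arbitrary assumptions, and `sleep` is injectable so the logic can be tested without waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    func: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 1.0,
    retry_on: tuple[type[BaseException], ...] = (OSError,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `func`, retrying on `retry_on` errors with exponential backoff.

    Waits base_delay, 2 * base_delay, 4 * base_delay, ... between attempts and
    re-raises the last error once all attempts are used up.
    """
    for attempt in range(attempts):
        try:
            return func()
        except retry_on:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")
```

`OSError` covers connection errors, timeouts, and `urllib.error.URLError`; note that `urllib.error.HTTPError` is also a subclass, so a 404 would be retried too unless you narrow `retry_on`. Usage: `html = with_retries(lambda: fetch(url))`.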

Real-time Updates and Scheduled Crawling: To keep information up to date, schedule the crawler to run regularly. This way, you can capture changes in event details, prices, and availability over time.
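A minimal in-process scheduler sketch; in practice, running the crawler from cron or another task scheduler is a common alternative. The `job` callable stands in for a full crawl, and `sleep` and `clock` are injectable so the loop can be tested without waiting.

```python
import time
from typing import Callable, Optional


def run_every(
    interval_seconds: float,
    job: Callable[[], None],
    max_runs: Optional[int] = None,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> int:
    """Run `job` every `interval_seconds`, forever unless `max_runs` is set.

    The job's own run time is subtracted from the wait, so runs start on a
    roughly fixed cadence instead of drifting later each cycle.
    """
    runs = 0
    while True:
        started = clock()
        job()
        runs += 1
        if max_runs is not None and runs >= max_runs:
            return runs
        sleep(max(0.0, interval_seconds - (clock() - started)))
```

An exception in `job` stops the loop, so wrapping each crawl in error handling keeps one bad run from ending the schedule.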

Data Cleaning and Normalization: Perform data cleaning and normalization to ensure consistency and accuracy in the extracted data. Remove unnecessary characters, format dates uniformly, and address any inconsistencies.
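A minimal cleaning sketch covering the three tasks above: whitespace cleanup, price parsing, and uniform dates. The input date formats are hypothetical examples; list whichever formats the pages you scrape actually use.

```python
import re
from datetime import datetime
from decimal import Decimal
from typing import Optional

# Hypothetical input formats; adjust to what the scraped pages contain.
DATE_FORMATS = ("%a %b %d %Y", "%m/%d/%Y", "%Y-%m-%d")


def clean_text(value: str) -> str:
    """Collapse runs of whitespace (including non-breaking spaces) into one space."""
    return re.sub(r"\s+", " ", value).strip()


def parse_price(value: str) -> Optional[Decimal]:
    """'$1,234.50' -> Decimal('1234.50'); None if no number is present."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", value)
    return Decimal(match.group().replace(",", "")) if match else None


def normalize_date(value: str) -> Optional[str]:
    """Convert any of DATE_FORMATS to ISO 8601 (YYYY-MM-DD), else None."""
    value = clean_text(value)
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    return None
```

`Decimal` avoids binary floating-point rounding in stored prices; returning `None` rather than guessing keeps unparseable values visible for review.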

Ethical Considerations: Ensure that your scraping practices adhere to Ticketmaster's terms of use and respect the website's policies. Implement mechanisms to avoid overloading the server with requests.
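A minimal politeness sketch: Python's `urllib.robotparser` checks robots.txt rules, and a small rate limiter spaces out requests. Neither replaces reading Ticketmaster's terms of use, and the interval you pick is your own assumption, not a documented limit.

```python
import time
from typing import Callable, Optional
from urllib.robotparser import RobotFileParser


def load_robots(robots_url: str) -> RobotFileParser:
    """Download and parse a site's robots.txt (requires network access)."""
    parser = RobotFileParser(robots_url)
    parser.read()
    return parser


class RateLimiter:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(
        self,
        min_interval: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None

    def wait(self) -> None:
        """Block until `min_interval` seconds have passed since the previous call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

Before each request, skip URLs for which `robots.can_fetch(user_agent, url)` returns `False`, then call `limiter.wait()`.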

Data Analysis and Visualization: Once you've collected substantial data, use analysis tools to gain insights. Visualize trends, spot patterns, and derive valuable information to inform decision-making.
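A minimal analysis sketch using Python's `statistics` module. It summarizes price observations per venue, which also supports the competitor comparisons described earlier. The `venue` and `min_price` keys are hypothetical record fields.

```python
from collections import defaultdict
from statistics import median


def price_summary(rows: list[dict], key: str = "venue") -> dict[str, dict]:
    """Group price observations by `key`; report count, min, median, and max."""
    groups: defaultdict[str, list[float]] = defaultdict(list)
    for row in rows:
        price = row.get("min_price")
        if price is not None:  # skip rows where no price was extracted
            groups[row[key]].append(price)
    return {
        name: {"count": len(p), "min": min(p), "median": median(p), "max": max(p)}
        for name, p in sorted(groups.items())
    }
```

The median is less distorted than the mean by a handful of extreme listings; passing `key="artist"` groups by artist instead.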

Monitoring and Maintenance: Regularly monitor the performance of your crawler, keeping an eye out for any changes in Ticketmaster's website structure that might affect data extraction. Update your crawler as needed.
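One lightweight monitoring signal is the share of records in which each field was actually extracted: a sudden drop often means a selector stopped matching after a layout change. A minimal sketch; the 90% threshold is an arbitrary assumption to tune.

```python
def field_fill_rates(records: list[dict], fields: list[str]) -> dict[str, float]:
    """Fraction of records in which each field is present and non-empty."""
    total = len(records)
    return {
        f: (sum(1 for r in records if r.get(f) not in (None, "")) / total if total else 0.0)
        for f in fields
    }


def layout_alerts(records: list[dict], fields: list[str], min_rate: float = 0.9) -> list[str]:
    """Fields whose fill rate fell below `min_rate`.

    An empty crawl reports every field, since zero records is itself a warning sign.
    """
    rates = field_fill_rates(records, fields)
    return sorted(f for f, rate in rates.items() if rate < min_rate)
```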

Conclusion: A Ticketmaster crawler offers a dynamic way to access and extract event data efficiently. By navigating the complexities of the website, providing real-time updates, and enabling competitive analysis, it gives stakeholders the insights needed for successful event management and strategic decision-making in the ever-evolving entertainment industry.

For further details, contact iWeb Data Scraping now! You can also reach us for all your web scraping and mobile app data scraping needs.