iWeb Data Scraping delivered a nationwide, sales-ready dataset of about 30,000 residential housecleaning businesses across the United States. The client’s non-negotiables were clear: every usable record needed a business name, email, phone, city, and state. A website URL was a nice-to-have when available. Sources included Google Search, Google Maps, Yelp, Craigslist, Facebook Marketplace and public groups, and business websites. The real challenge was precision. The client wanted only residential housecleaning and maid services and would not accept janitorial, commercial-only, pressure-washing, or window-cleaning-only results. We built a layered pipeline that combined targeted discovery, structured extraction, strict classification, and multi-step QA. The end product was a clean, deduplicated dataset with strong email and phone coverage, mapped to every state, and ready for outreach.

Primary Objectives

Done-Right Criteria

We collected a set of must-have fields: business name, publicly available email, phone number, city, and state. We enriched each record with secondary fields: website URL, ZIP code (when available), and the source of the information (Google Maps, Yelp, Craigslist, Facebook, or Google Search). We also inferred a category such as “House Cleaning Service” or “Maid Service” and captured ratings and review counts from Google or Yelp when present. Other fields included hourly rates in USD (if posted on Craigslist or Facebook), publicly advertised offers or coupons, a last-seen timestamp as an ISO date, and free-text notes (for example, “move-out cleaning” or “pet friendly”).

We collected only public business contact information intended for customer inquiries. We used official APIs where appropriate, respected rate limits, and followed platform rules.
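To make the field list concrete, here is a minimal Python sketch of one record and its must-have check. The class `CleaningLead`, its field names, and the helpers `missing_required` and `is_usable` are hypothetical; they mirror the fields described above but are not the schema delivered to the client.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class CleaningLead:
    """Hypothetical record layout mirroring the fields described above."""
    # Must-have fields
    business_name: str
    email: str
    phone: str
    city: str
    state: str
    # Secondary fields: None when the business did not publish them
    website: Optional[str] = None
    zip_code: Optional[str] = None
    source: Optional[str] = None          # e.g. "google_maps", "yelp", "craigslist"
    category: Optional[str] = None        # e.g. "House Cleaning Service"
    rating: Optional[float] = None
    review_count: Optional[int] = None
    hourly_rate_usd: Optional[float] = None
    offer: Optional[str] = None
    last_seen: Optional[str] = None       # ISO date such as "2025-08-01"
    notes: list = field(default_factory=list)  # e.g. ["move-out cleaning", "pet friendly"]

REQUIRED_FIELDS = ("business_name", "email", "phone", "city", "state")

def missing_required(lead: CleaningLead) -> list:
    """Names of must-have fields that are empty or whitespace-only."""
    return [name for name in REQUIRED_FIELDS if not getattr(lead, name).strip()]

def is_usable(lead: CleaningLead) -> bool:
    """A record is usable only when every must-have field is filled."""
    return not missing_required(lead)

lead = CleaningLead("TidyNest Home Cleaning", "", "+1 617-555-0177", "Boston", "MA",
                    source="craigslist")
print(missing_required(lead))  # ['email']
```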

(1) Google Search and Google Maps

We ran grid queries on Google Search and Google Maps across cities, parsed cleaning-category results, and captured the name, contact details, rating, review count, and location of each listing.
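As an illustration of grid querying, the sketch below expands a list of search terms across a list of cities into one query per grid cell. The terms, the three cities, and `build_grid_queries` are assumptions for illustration only. The sketch builds query strings and does not call Google; in practice those requests would go through an official API or approved tool within its rate limits.

```python
from itertools import product

# Hypothetical inputs: the actual term list and city grid are not published.
SEARCH_TERMS = ["house cleaning", "maid service", "residential cleaning"]
CITIES = [("San Francisco", "CA"), ("Chicago", "IL"), ("Houston", "TX")]

def build_grid_queries(terms, cities):
    """Yield one query string for every (search term, city) cell of the grid."""
    for term, (city, state) in product(terms, cities):
        yield f"{term} in {city}, {state}"

queries = list(build_grid_queries(SEARCH_TERMS, CITIES))
print(len(queries))   # 9
print(queries[0])     # house cleaning in San Francisco, CA
```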

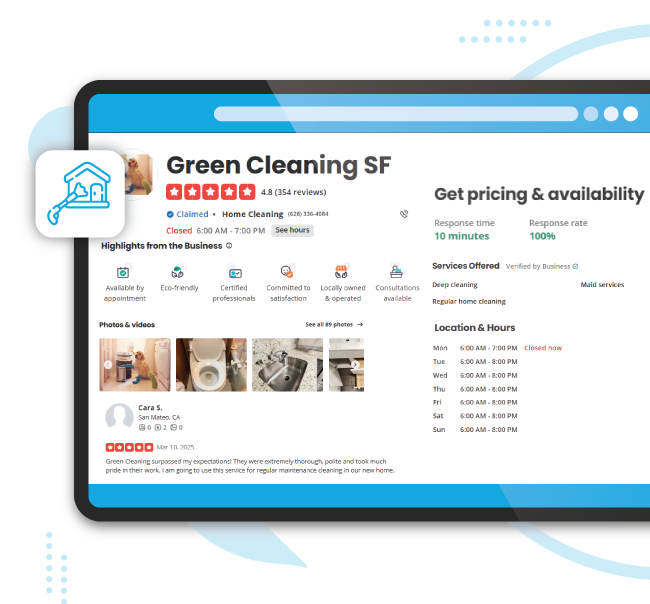

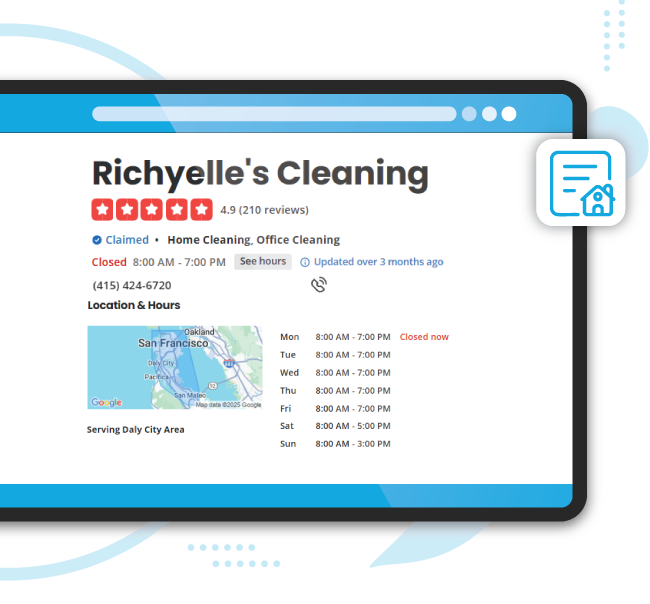

(2) Yelp

On Yelp, we focused on cleaning categories and swept all target cities, adding ratings, review counts, and reputation details to support accurate lead scoring.
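The case study does not say how lead scores were computed. As one possible approach, assumed here purely for illustration, the hypothetical `lead_score` below weights the star rating by the log of the review count, so a 4.6-star business with hundreds of reviews can outrank a 4.8-star business with a few dozen. The sample ratings are borrowed from the illustrative table in this case study.

```python
import math
from typing import Optional

def lead_score(rating: Optional[float], review_count: Optional[int]) -> float:
    """Hypothetical reputation score: star rating weighted by log-scaled review volume.

    Missing ratings or zero reviews score 0.0, so unrated businesses sort last.
    """
    if rating is None or not review_count:
        return 0.0
    return round(rating * math.log10(1 + review_count), 2)

# Ratings and review counts from the illustrative sample table
leads = [
    ("Sparkle & Shine Maid Service", 4.7, 129),
    ("HomeFresh House Cleaning", 4.6, 212),
    ("Coastal Maids of St. Pete", 4.8, 94),
]
ranked = sorted(leads, key=lambda lead: lead_score(lead[1], lead[2]), reverse=True)
for name, rating, reviews in ranked:
    print(f"{lead_score(rating, reviews):>6.2f}  {name}")
```

Log scaling is a design choice: it rewards review volume without letting a few very large listings dominate the ranking.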

(3) Craigslist

On Craigslist, we scanned household cleaning service posts and captured rates, service areas, promotions, and publicly posted phone numbers or email addresses.
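A minimal sketch of pulling contact details and an hourly rate out of a free-text posting with regular expressions. The patterns and the `parse_posting` helper are assumptions; they cover common US formats such as `(617) 555-0177` and `$42/hr` and would miss many real-world variations. Phone numbers are normalized to the `+1 NNN-NNN-NNNN` style used in the sample table.

```python
import re
from typing import Optional

# Illustrative patterns only (assumed); real postings need broader rules.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\(?\b(\d{3})\)?[-.\s]?(\d{3})[-.\s]?(\d{4})\b")
RATE_RE = re.compile(r"\$\s?(\d+(?:\.\d{1,2})?)\s*(?:/|per\s*)\s*(?:hr|hour)\b", re.IGNORECASE)

def parse_posting(text: str) -> dict:
    """Extract the first email, US phone, and hourly rate from a post; None when absent."""
    email = EMAIL_RE.search(text)
    phone = PHONE_RE.search(text)
    rate = RATE_RE.search(text)
    return {
        "email": email.group(0) if email else None,
        "phone": "+1 {}-{}-{}".format(*phone.groups()) if phone else None,
        "hourly_rate_usd": float(rate.group(1)) if rate else None,
    }

post = "Reliable home cleaning, $42/hr. Call (617) 555-0177 or email tidynestcleaning@gmail.com"
print(parse_posting(post))
```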

(4) Facebook Marketplace and Public Groups

On Facebook Marketplace and in public groups, we searched cleaning terms and captured public listings with contact details, offers, rate hints, and service-area coverage.

(5) Website Enrichment

Website enrichment captured public emails from contact pages, noted residential service language for classification, and avoided gated or private content.
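The sketch below shows one way to do this step with Python's standard-library `html.parser`: collect `mailto:` links and email-like strings from a contact page that has already been fetched, and note which residential phrases appear. The class `ContactPageParser`, the function `scan_contact_page`, and the phrase list are hypothetical; fetching, robots rules, and rate limiting are out of scope here.

```python
import re
from html.parser import HTMLParser

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Assumed phrase list; the real classification vocabulary is not published.
RESIDENTIAL_PHRASES = ("house cleaning", "home cleaning", "maid service", "move-out cleaning")

class ContactPageParser(HTMLParser):
    """Collects mailto: targets and text content from an already-fetched public page."""

    def __init__(self):
        super().__init__()
        self.mailto = []
        self.text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href") or ""
            if href.lower().startswith("mailto:"):
                # Drop the "mailto:" scheme and any "?subject=..." query
                self.mailto.append(href[len("mailto:"):].split("?")[0])

    def handle_data(self, data):
        self.text_parts.append(data)

def scan_contact_page(html):
    """Return (unique emails, residential phrases found) for one page."""
    parser = ContactPageParser()
    parser.feed(html)
    text = " ".join(parser.text_parts)
    emails, seen = [], set()
    for email in parser.mailto + EMAIL_RE.findall(text):
        if email.lower() not in seen:  # case-insensitive de-duplication
            seen.add(email.lower())
            emails.append(email)
    phrases = [p for p in RESIDENTIAL_PHRASES if p in text.lower()]
    return emails, phrases

page = ('<p>Weekly house cleaning and move-out cleaning.</p>'
        '<a href="mailto:book@homefreshclean.com?subject=Quote">Email us</a>')
print(scan_contact_page(page))
```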

The most important rule was relevance. We needed home cleaning and maid services only. To enforce that rule, we used layered checks:

Allow List Signals

Deny List Signals

Model and Rules

This method cut out noise and kept precision high for residential maid services.
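The allow/deny layer can be sketched as a small keyword classifier. The keyword lists and the `classify` function are assumptions: the deny terms echo the exclusions named earlier (janitorial, commercial-only, pressure washing, window cleaning), and the allow terms are typical residential phrases. This covers only the rules layer; records with mixed or missing signals are returned as `"review"` so a model score or a human can decide, since the case study does not describe the model itself.

```python
# Hypothetical keyword lists; deny terms mirror the exclusions named in this case study.
ALLOW = ("house cleaning", "home cleaning", "maid service",
         "residential cleaning", "move-out cleaning")
DENY = ("janitorial", "commercial cleaning", "office cleaning",
        "pressure washing", "power washing", "window cleaning")

def classify(text: str) -> str:
    """Return 'include', 'exclude', or 'review' based on allow/deny keyword hits."""
    t = text.lower()
    allow_hits = [k for k in ALLOW if k in t]
    deny_hits = [k for k in DENY if k in t]
    if allow_hits and not deny_hits:
        return "include"
    if deny_hits and not allow_hits:
        return "exclude"
    # Mixed or absent signals: hand off to a model score or a human reviewer
    return "review"

print(classify("Sparkle & Shine Maid Service, weekly house cleaning"))  # include
print(classify("Office janitorial contracts"))                         # exclude
print(classify("House cleaning and window cleaning"))                  # review
```

Routing mixed signals to review rather than excluding them keeps residential cleaners that also offer window cleaning from being dropped automatically.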

Validation

Deduplication

Sampling and Audits

We documented the few edge cases that were tricky to classify and how we resolved them.

Below are illustrative samples that show the shape of the delivered dataset. These are examples only. They are not from a live run.

| Business Name | Email | Phone | Website | City | State | Rating | Reviews | Hourly Rate (USD) | Offer / Coupon | Source | Last Seen |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Sparkle & Shine Maid Service | hello@sparkleshinemaids.com | +1 415-550-1337 | sparkleshinemaids.com | San Francisco | CA | 4.7 | 129 | 45 | 20% off first clean | google_maps | 2025-08-01 |
| HomeFresh House Cleaning | book@homefreshclean.com | +1 630-555-0119 | homefreshclean.com | Chicago | IL | 4.6 | 212 | 38 | New client $15 discount | yelp | 2025-08-02 |
| Magic Broom Residential Cleaning | — | +1 718-555-0102 | — | Houston | TX | 4.2 | 87 | 35 | — | — | 2025-08-03 |
| Coastal Maids of St. Pete | contact@coastalmaidsfl.com | +1 727-555-0144 | coastalmaidsfl.com | St. Petersburg | FL | 4.8 | 94 | — | $25 off move-out cleaning | google_maps | 2025-08-04 |
| TidyNest Home Cleaning | tidynestcleaning@gmail.com | +1 617-555-0177 | — | Boston | MA | 4.5 | 65 | 42 | Refer-a-friend $10 credit | craigslist | 2025-08-05 |

Offer and pricing examples from public listings

When posts listed a rate or promotion, we captured it. If not, those fields were left blank.
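A minimal sketch of capturing a promotion when one is posted and leaving the field blank otherwise. The three regular expressions and `extract_offer` are illustrative assumptions modeled on the offers in the sample table; real posts use far more varied wording.

```python
import re
from typing import Optional

# Illustrative promo patterns (assumed); each match stops at the end of its clause.
OFFER_PATTERNS = [
    re.compile(r"\d{1,2}%\s*off[^.,;!\n]*", re.IGNORECASE),               # "20% off first clean"
    re.compile(r"\$\d+\s*off[^.,;!\n]*", re.IGNORECASE),                  # "$25 off move-out cleaning"
    re.compile(r"[^.,;!\n]*\$\d+\s*(?:discount|credit)", re.IGNORECASE),  # "New client $15 discount"
]

def extract_offer(text: str) -> Optional[str]:
    """Return the first advertised promotion in a post, or None to leave the field blank."""
    for pattern in OFFER_PATTERNS:
        match = pattern.search(text)
        if match:
            return match.group(0).strip()
    return None

print(extract_offer("Book now: 20% off first clean. Insured and bonded."))
print(extract_offer("Weekly and biweekly cleaning available"))
```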

1. Geography and source sampling

We drew random samples from every state and from each source type. Each sample went to a human reviewer who confirmed residential focus, verified contact fields, and flagged edge cases for re-check.
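One way to guarantee coverage of every state and every source is to stratify on both keys at once. The sketch below, with a hypothetical `stratified_sample` function and an assumed `per_stratum` of 5, draws a reproducible random sample from each (state, source) group for human review; the case study does not state the sample size used in this step.

```python
import random
from collections import defaultdict

def stratified_sample(records, per_stratum=5, seed=42):
    """Draw up to `per_stratum` random records from every (state, source) group.

    Grouping on both keys ensures each state and each source type in the data is represented.
    """
    rng = random.Random(seed)  # fixed seed makes a review batch reproducible
    groups = defaultdict(list)
    for rec in records:
        groups[(rec["state"], rec["source"])].append(rec)
    sample = []
    for key in sorted(groups):
        members = groups[key]
        sample.extend(rng.sample(members, min(per_stratum, len(members))))
    return sample
```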

2. Email and phone checks

3. Classification audits

We ran periodic blind checks on 500-record samples. A human labeled each record, and we compared those labels against the model’s classifications. When we found systematic drift, we adjusted the model and rules.
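The comparison can be sketched as agreement, precision, and recall between the human labels and the model’s labels, plus a simple drift flag. The functions `audit_metrics` and `drifted`, and the 0.02 tolerance, are assumptions for illustration; the case study does not specify which metrics or thresholds were used.

```python
def audit_metrics(human, model):
    """Compare human labels (treated as ground truth) with model labels for the same records.

    Each label is True for "residential housecleaning" and False otherwise.
    """
    if not human or len(human) != len(model):
        raise ValueError("need two non-empty label lists of equal length")
    pairs = list(zip(human, model))
    tp = sum(1 for h, m in pairs if h and m)
    fp = sum(1 for h, m in pairs if not h and m)
    fn = sum(1 for h, m in pairs if h and not m)
    agreement = sum(1 for h, m in pairs if h == m) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"agreement": agreement, "precision": precision, "recall": recall}

def drifted(current, baseline, tolerance=0.02):
    """Hypothetical drift flag: precision fell more than `tolerance` below a baseline audit."""
    return baseline["precision"] - current["precision"] > tolerance
```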

4. Deduplication audits

Potential duplicate clusters were reviewed before the final export. Where two rows referred to the same entity, we kept the more complete record and merged metadata from the rest.
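A minimal sketch of the clustering and merge step, assuming records are plain dictionaries. Clustering on a normalized phone number (falling back to the lowercased business name) is an assumption, as are the helper names `normalize_phone`, `completeness`, and `dedupe`. The case study describes a human review of each cluster before export; this sketch performs the merge automatically, so in practice its clusters would be queued for a reviewer to confirm.

```python
import re

BLANK = (None, "")

def normalize_phone(phone):
    """Reduce a US phone number to 10 digits, or None if that is not possible."""
    digits = re.sub(r"\D", "", phone or "")
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop the +1 country code
    return digits if len(digits) == 10 else None

def completeness(rec):
    """Count the filled fields in a record."""
    return sum(1 for value in rec.values() if value not in BLANK)

def dedupe(records):
    """Cluster rows by normalized phone (falling back to lowercased name), keep the
    most complete row per cluster, and fill its blank fields from the other rows."""
    clusters = {}
    for rec in records:
        key = normalize_phone(rec.get("phone")) or rec["business_name"].strip().lower()
        clusters.setdefault(key, []).append(rec)
    merged = []
    for rows in clusters.values():
        rows.sort(key=completeness, reverse=True)  # most complete row first
        best = dict(rows[0])
        for other in rows[1:]:
            for name, value in other.items():
                if best.get(name) in BLANK and value not in BLANK:
                    best[name] = value
        merged.append(best)
    return merged
```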

File formats

CRM mapping

Documentation

Platform variability

Ambiguous categories

Data drift

Compliance

Where the market is dense

What offers move the market

Who to call first

Pricing cues

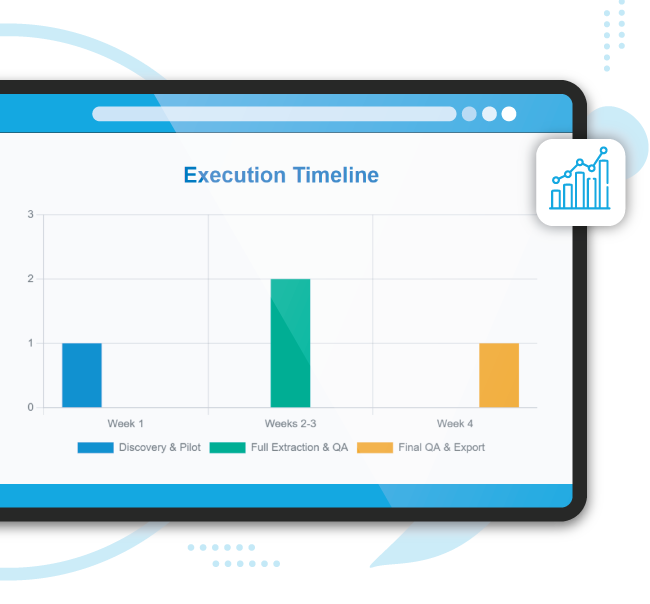

Week 1

Weeks 2–3

Week 4

Included

Excluded

Outbound

Partnerships

Operations

If you want a US-wide, strictly residential housecleaning dataset with strong contact coverage and clean formatting for CRM import, iWeb Data Scraping can build it to your specs. Tell us your target states, any rating thresholds, and your preferred file format. We will deliver a dataset your sales team can start using right away.

This 30,000-record case study shows how iWeb Data Scraping’s expertise in Web Scraping for Sales Lead Generation and MAP Monitoring Services for Smarter Business Decisions can turn scattered online information into a sales-ready asset. By combining precise category targeting, rigorous data quality checks, and compliance-driven sourcing, we delivered a nationwide dataset that supports smarter outreach, market analysis, and competitive strategy, helping businesses act faster, target better, and grow with confidence.

We start by signing a Non-Disclosure Agreement (NDA) to protect your ideas.

Our team will analyze your needs to understand what you want.

You'll get a clear and detailed project outline showing how we'll work together.

We'll take care of the project, allowing you to focus on growing your business.