A deep understanding of the business domain is crucial for companies to stay competitive. However, staying updated with the latest news and gathering sufficient knowledge can take time and effort. To expedite the process and acquire relevant information rapidly, scraping news sites has become indispensable in the news industry.

Web scraping news articles enables businesses to access essential updates and news about their industry swiftly. It allows for quick knowledge acquisition, saving valuable time. This article will delve into the essentials of news scraping, providing a comprehensive guide on how to scrape news sites effortlessly and efficiently.

News scraping automatically extracts data from news websites, explicitly gathering information from public online media sources. It involves retrieving updates, press releases, and other relevant content from news websites through automated means.

Scraping news sites allows businesses to access valuable public data, including customer reviews, product information, and important announcements. This data can provide crucial insights and help companies stay informed about industry trends and developments.

It is important to note that extracting news data should only be performed on publicly available data from news websites and should adhere to legal and ethical guidelines.

News scraping using a news article scraper is crucial in enhancing compliance, providing up-to-date information, verifying authenticity, identifying risks, and delivering key business announcements. It is a valuable tool for businesses to gain insights, make informed decisions, and stay competitive in their respective industries. Scraping News Articles from the CNN website offers numerous benefits and plays a pivotal role in various aspects of business operations:

Enhances Compliance and Operations: By scraping news sites, businesses can stay updated on regulatory changes, industry guidelines, and compliance requirements. It helps them ensure their operations align with legal and industry standards.

Provides Up-to-Date Information: Scrape CNN News Articles using Python to enable businesses to access real-time and up-to-date information about industry trends, market conditions, and competitor activities. It helps companies make informed decisions and stay ahead in the market.

Offers Verified and Authentic Information: News sites are reputable sources of verified and trustworthy information. News articles data scraping services allow businesses to gather reliable insights, news updates, and announcements from credible sources.

Identifies Mitigation and Risks: By scraping news sites, businesses can identify potential risks, such as negative market trends, legal issues, or reputational risks. This early awareness allows for proactive mitigation strategies and informed risk management.

Key Business Announcements: News sites often feature important business announcements, such as product launches, mergers and acquisitions, partnerships, and financial reports. Scraping this information keeps businesses well-informed about significant industry developments and potential business opportunities.

Python provides a robust ecosystem for web scraping, making it a popular choice for developers and data analysts seeking to extract data from websites efficiently. It is a popular programming language widely used for web scraping due to its simplicity, extensive libraries, and ease of use. Web scraping automatically extracts data from websites by sending HTTP requests, parsing the HTML content, and extracting the desired information.

Python offers several powerful libraries and tools that make web scraping relatively straightforward. Some of the critical libraries used in web scraping with Python include:

Requests: This library lets you send HTTP requests to a website and retrieve the HTML content. It handles the underlying communication and simplifies the process of making requests.

Beautiful Soup: This library is helpful for parsing HTML or XML documents. It provides a convenient way to navigate and search the parsed data structure, making extracting the required information from the web page accessible.

Scrapy: Scrapy is a comprehensive web scraping framework for Python. It provides a set of built-in functionalities and tools to handle complex scraping tasks. Scrapy handles request scheduling, response parsing, and data extraction, making it suitable for larger-scale scraping projects.

Selenium: Selenium helps automate web browsers. It allows you to interact with websites that rely heavily on JavaScript or require user interactions. Selenium can be helpful when scraping dynamic websites that generate content dynamically through JavaScript.

The project involves creating a web application that collects real-time data from multiple newspapers and generates a summary of various topics mentioned in the news articles. It requires a Machine Learning algorithm for classifying news articles into different categories, a web scraping approach to retrieve the latest headlines from various newspapers, and an active web service that presents the results to the user.

The web application will employ a Machine Learning algorithm to classify news articles based on their content, enabling the prediction of the category to which a particular article belongs. This classification task will help organize the news items and provide a structured overview of the topics covered.

A web scraping approach will extract the latest headlines from multiple newspapers. This automated process will retrieve the headlines from the news websites and collect the required information for further analysis and summarization.

Follow the following steps to accomplish the task of scraping news articles and utilizing a classification model:

a.Quick Overview of Web Pages and HTML: Understand how web pages are structured using HTML (Hypertext Markup Language). This knowledge will help identify and extract the desired data from the news websites.

b.Python Web Scraping with BeautifulSoup: Utilize the BeautifulSoup library in Python for web scraping. BeautifulSoup simplifies the process of parsing HTML and extracting relevant data. It provides convenient methods for navigating the HTML tree structure and retrieving specific elements such as headlines, article text, and other relevant information from the news pages.

Following these steps, you can create a script that scrapes recent news stories from multiple newspapers, saves the text, and feeds it into the classification model for category prediction. Combining web scraping, classification, and app development will enable you to build a comprehensive solution for extracting news data and making category predictions.

It is essential to understand how websites operate and the technologies involved in extracting news stories or any other content from a website effectively. When we visit a website by entering its URL into a web browser like Google Chrome, Firefox, or Internet Explorer, we are interacting with three leading technologies:

HTML: HTML is the standard language used for structuring and adding content to webpages. It defines the elements and layout of a webpage, allowing us to include text, graphics, links, and other multimedia. HTML tags provide the structure and hierarchy of the content on a webpage.

CSS: CSS is a programming language that complements HTML by controlling a webpage's visual presentation and layout. It allows us to customize the appearance of elements, such as colors, fonts, sizes, margins, and more. CSS provides flexibility in styling and helps create a visually appealing and consistent design across web pages.

JavaScript: While not explicitly mentioned, JavaScript is another vital website technology. It is a programming language that adds interactivity and dynamic functionality to web pages. JavaScript allows for actions like data validation, animations, form submission handling, and more. It is often used with HTML and CSS to create interactive and responsive web experiences.

Activate the Python installation by activating the following script:

Now, install the request.

To extract data from a website, we will utilize the requests module to obtain the HTML code of the webpage. Then, we can navigate through the HTML structure using the BeautifulSoup package. Two essential instructions will suffice for our purposes:

find_all(element tag, attribute): This instruction helps identify specific HTML elements on a webpage based on their tag and attributes. It returns all the matching elements of the specified kind. Alternatively, find () can retrieve only the first matching element.

get_text(): This command allows us to extract the text content from a particular HTML element.

To proceed, we need to access the HTML code of the webpage. For instance, in the Chrome browser, we can right-click on the webpage, select "View Page Source," and find ourselves in the source code. We can also use the Ctrl+F or Cmd+F shortcut to search for specific elements within the source code.

After identifying the relevant elements, we can utilize the requests module to retrieve the HTML code of the webpage. Then, using BeautifulSoup, we can extract the desired elements based on their tags and attributes.

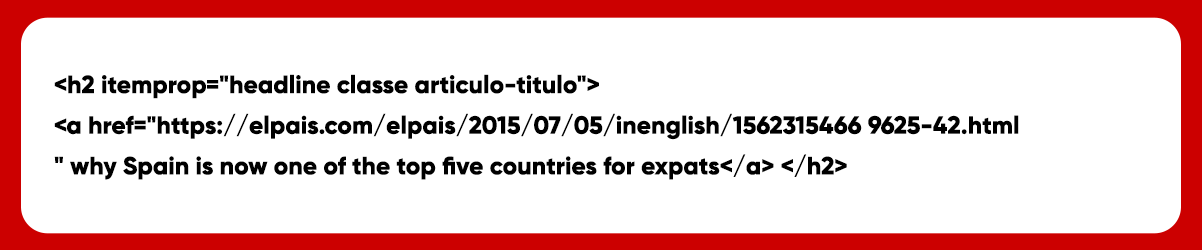

We need to explore the HTML code to locate the news articles on the website. After examining it, we can observe that each article on the front page possesses the following elements:

The Header: It is an <h2>(heading-2) element with attributes itemprop="headline" and class="articulo-titulo." The text content of the header lies within an <a> element with an href attribute.

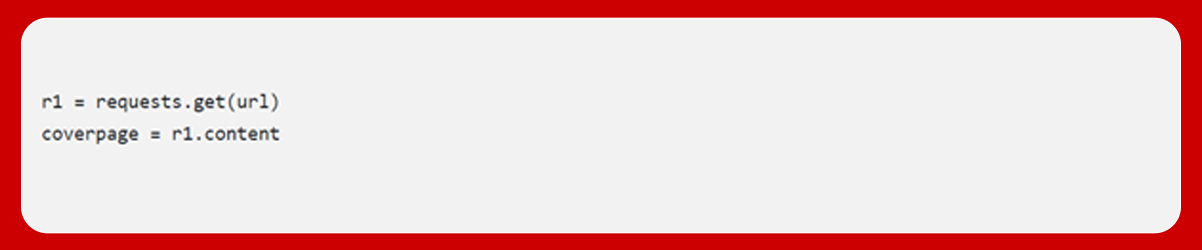

We will get the HTML content via the requests module and save it to cover page variables.

We will now make soup.

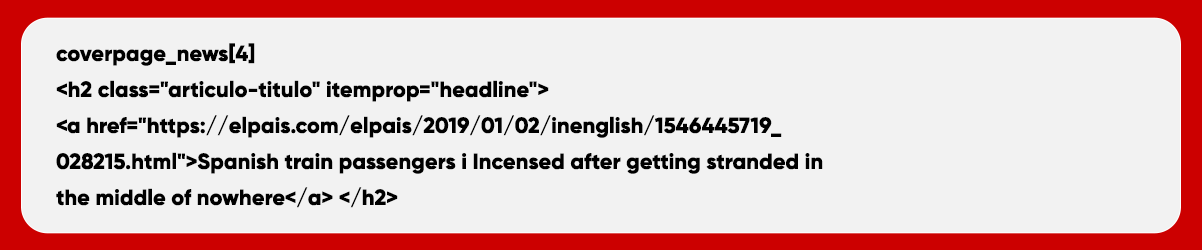

Finally, we will get our desired elements.

Retrieving occurrences using the final all option will return a list with each element of the news article.

Extract the text using the following command.

Type the following to obtain the value of specific.

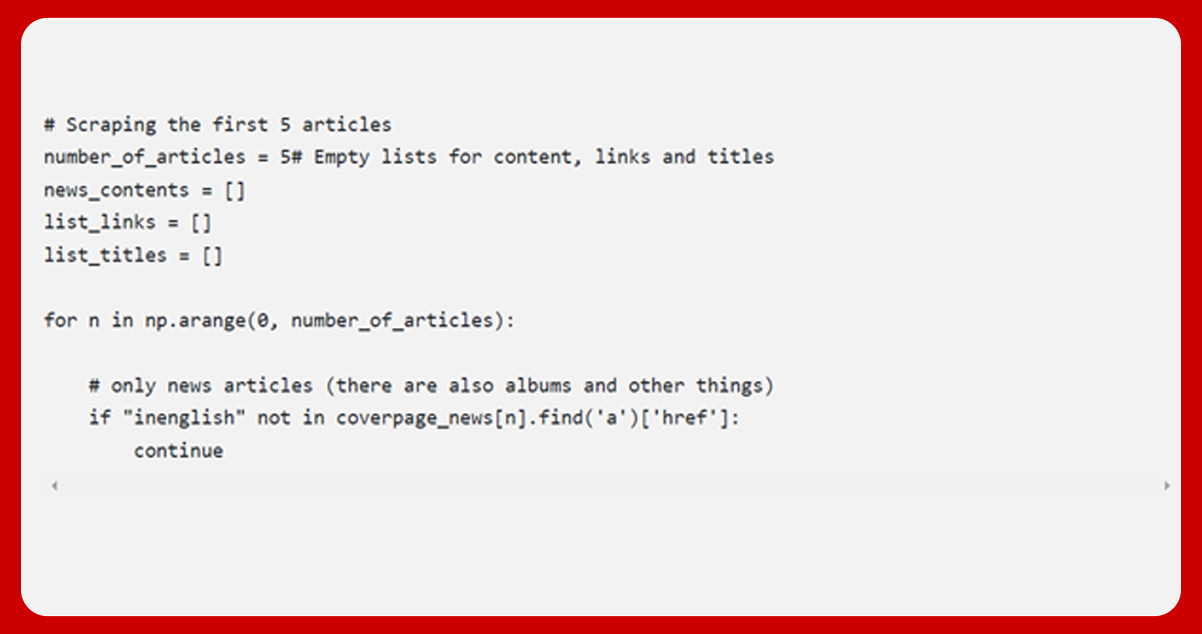

Now, use the href attribute to obtain the content of each news article. Below is the complete process code.

For further details, contact iWeb Data Scraping now! You can also reach us for all your web scraping service and mobile app data scraping needs